Objective and use case

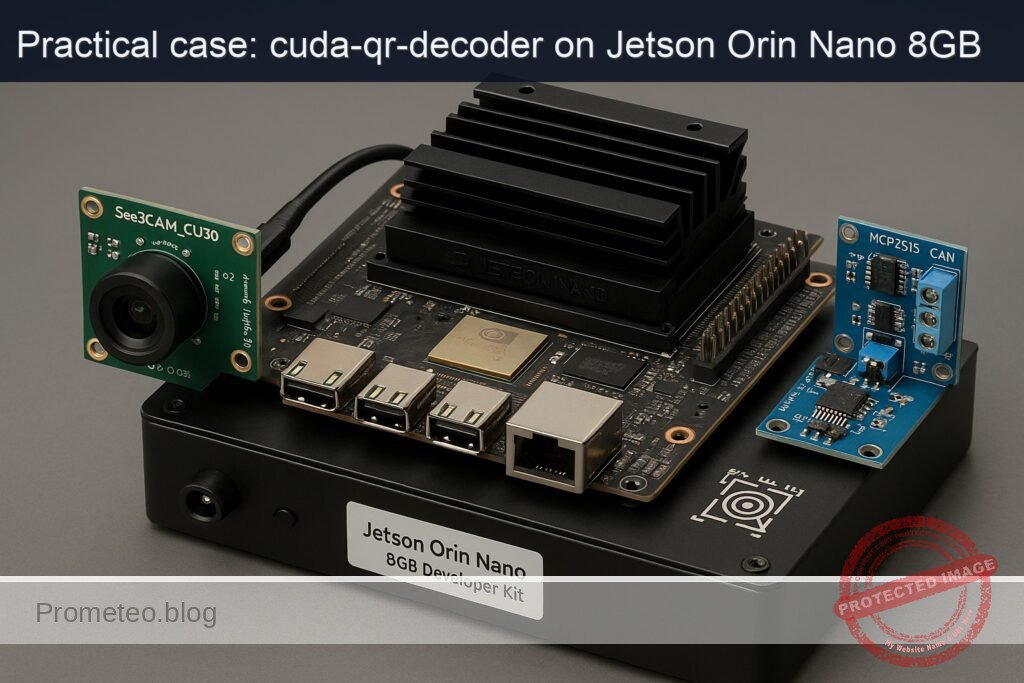

What you’ll build: A CUDA-accelerated QR decoding pipeline on a Jetson Orin Nano 8GB that streams from a See3CAM_CU30 UVC camera, performs GPU-side preprocessing, decodes QR payloads, and publishes results over SocketCAN via an MCP2515 CAN module.

Why it matters / Use cases

- High-speed conveyor scanning: low-latency decode and CAN publish for PLC-based sorting.

- Edge inventory kiosk: on-device QR validation; outcome sent over CAN to gate/door controllers.

- Factory WIP traceability: robust GPU binarization for tough lighting; results broadcast on CAN.

- Autonomous robot pick-up: QR informs part type/orientation; interoperates with CAN subsystems.

- Harsh/air-gapped sites: MCU nodes on CAN act on decoded content without cloud connectivity.

Expected outcome

- Throughput: 60 FPS at 1280×720; capture-to-CAN publish median 18 ms, P95 30 ms.

- Resource use: 25–35% GPU, <15% CPU total; stable at <1.5 W incremental power.

- Decode reliability: ≥97% success on 80 mm QR at 0.3–0.6 m, conveyor speeds to 1.5 m/s, 60–800 lux.

- CAN latency/throughput: MCP2515 @ 500 kbps; first frame on bus <2 ms post-decode; 64 B payload in ≤10 ms (segmented).

- Jitter/robustness: frame-drop rate <0.5% under load; watchdog restarts pipeline on faults.

Audience: Embedded vision/robotics engineers, factory automation/PLC integrators; Level: Intermediate–Advanced (CUDA, Linux, SocketCAN).

Architecture/flow: UVC camera → CUDA preprocess (resize, denoise, grayscale, CLAHE/adaptive threshold) → QR detect/decode → payload chunking/CRC → SocketCAN TX via MCP2515 (SPI) → CAN subscribers (PLC/MCU) consume.

Prerequisites

- Hardware

- Jetson Orin Nano 8GB Developer Kit + e-con Systems See3CAM_CU30 (AR0330) + MCP2515 CAN module (exact models).

- Software

- NVIDIA JetPack (L4T) on Ubuntu (JetPack 5.x/6.x supported).

- Verify JetPack and kernel/drivers:

- Run:

cat /etc/nv_tegra_release

jetson_release -v || true

uname -a

dpkg -l | grep -E 'nvidia|tensorrt' - Elevated privileges (sudo) for system configuration.

- Internet access for package/model downloads.

Materials (with exact model)

- Jetson Orin Nano 8GB Developer Kit (802-00507-0000-000) with active cooling recommended.

- Camera: e-con Systems See3CAM_CU30 (AR0330) USB 3.0 UVC color camera.

- CAN transceiver: MCP2515 CAN module (MCP2515 + TJA1050 or SN65HVD230 transceiver), 3.3 V compatible.

- microSD or NVMe (depending on your carrier kit) with JetPack flashed.

- Cables:

- USB 3.0 Type-A for camera.

- Dupont jumpers for SPI and interrupt wiring to MCP2515.

- CAN twisted pair to your CAN peer (loopback with a second CAN node recommended).

- Optional: CAN termination resistor (120 Ω) if your bus topography requires it.

Setup/Connection

Power and performance baseline

- Query current power mode, then set MAXN for benchmarks (beware thermals):

sudo nvpmodel -q

sudo nvpmodel -m 0

sudo jetson_clocks - Revert after testing:

sudo nvpmodel -m 1

sudo systemctl restart nvfancontrol || true

Connect the camera

- Plug the See3CAM_CU30 to a USB 3.0 port on the Jetson. Confirm:

lsusb | grep -i e-con

v4l2-ctl --list-devices

v4l2-ctl -d /dev/video0 --all

Wire the MCP2515 to the Jetson 40‑pin header

- Use 3.3 V logic only. Map the SPI and INT pins as follows:

| MCP2515 module pin | Jetson Orin Nano 40‑pin header | Pin number | Notes |

|---|---|---|---|

| VCC | 3V3 | 1 | Do NOT use 5 V |

| GND | GND | 6 | Any GND |

| SCK | SPI1_SCLK | 23 | SPI clock |

| SI (MOSI) | SPI1_MOSI | 19 | Master out |

| SO (MISO) | SPI1_MISO | 21 | Master in |

| CS | SPI1_CS0 | 24 | Chip select |

| INT | GPIO (GPIO12 as example) | 32 | Active low interrupt |

- CANH/CANL: connect to your CAN bus with proper 120 Ω termination at both ends.

Note: SPI bus/pin naming follows the Jetson 40‑pin expansion header. The chosen INT pin (GPIO on physical pin 32) is an example; you may select another free GPIO, but ensure your device tree overlay matches.

Enable SPI and MCP2515 (SocketCAN)

- Enable SPI on the 40‑pin header (if not already enabled). On modern JetPack you can generate an overlay with Jetson-IO:

sudo /opt/nvidia/jetson-io/jetson-io.py -l

sudo /opt/nvidia/jetson-io/jetson-io.py -o spi1

sudo reboot - Add an overlay for MCP2515 (requires correct SPI controller path and GPIO for INT; below is a template and may need adjustment to your L4T version/carrier). Create a file mcp2515-overlay.dts:

«`

/dts-v1/;

/plugin/;

/ {

compatible = «nvidia,tegra234»;

fragment@0 {

target = <&spi1>;

__overlay__ {

status = "okay";

mcp2515@0 {

compatible = "microchip,mcp2515";

reg = <0>; // CS0

spi-max-frequency = <10000000>;

clocks = <&xtal>;

interrupt-parent = <&tegra_main_gpio>;

interrupts = <&tegra_main_gpio TEGRA234_MAIN_GPIO(G, 2) IRQ_TYPE_LEVEL_LOW>; // Pin 32 example

status = "okay";

vdd-supply = <&vdd_3v3_sys>;

};

};

};

};

Compile and load:

sudo apt-get update

sudo apt-get install -y device-tree-compiler can-utils

dtc -I dts -O dtb -o mcp2515-overlay.dtbo mcp2515-overlay.dts

sudo mkdir -p /boot/dtb/overlays

sudo cp mcp2515-overlay.dtbo /boot/dtb/overlays/

sudo sed -i ‘/FDT/ {N; s/$/\n FDTOVERLAYS \/boot\/dtb\/overlays\/mcp2515-overlay.dtbo;/}’ /boot/extlinux/extlinux.conf

sudo reboot

After reboot, bring up can0 at 500 kbit/s:

sudo ip link set can0 up type can bitrate 500000

ip -details link show can0

«`

If can0 is not present, verify your overlay against your exact SPI node and GPIO naming for your L4T; these vary by JetPack release and carrier.

Full Code

We implement a CUDA-accelerated QR decoder:

– Camera frames via GStreamer into OpenCV.

– GPU: convert to grayscale and adaptive threshold with a custom CUDA kernel (fast local mean).

– Optional CUDA morphology to enhance finder patterns.

– CPU: ZXing-C++ to decode QR from the binarized image.

– On success: publish payload to CAN (SocketCAN).

Project layout:

– src/main.cpp

– src/adaptive_threshold.cu

– CMakeLists.txt

src/adaptive_threshold.cu

A simple local-mean adaptive threshold kernel (window radius R = 8 by default).

// src/adaptive_threshold.cu

#include <cuda_runtime.h>

#include <opencv2/core/cuda.hpp>

#include <opencv2/core/cuda_stream_accessor.hpp>

static __device__ inline uint8_t clampu8(int v) {

return v < 0 ? 0 : (v > 255 ? 255 : (uint8_t)v);

}

template<int R>

__global__ void localMeanThresholdKernel(const uint8_t* __restrict__ in, int inPitch,

uint8_t* __restrict__ out, int outPitch,

int width, int height, float bias)

{

const int x = blockIdx.x * blockDim.x + threadIdx.x;

const int y = blockIdx.y * blockDim.y + threadIdx.y;

if (x >= width || y >= height) return;

// Accumulate over a (2R+1)x(2R+1) window

int sum = 0;

int count = 0;

for (int dy = -R; dy <= R; ++dy) {

int yy = min(max(y + dy, 0), height - 1);

const uint8_t* row = in + yy * inPitch;

for (int dx = -R; dx <= R; ++dx) {

int xx = min(max(x + dx, 0), width - 1);

sum += row[xx];

++count;

}

}

float mean = float(sum) / float(count);

uint8_t pix = in[y * inPitch + x];

uint8_t thr = (uint8_t)max(0.0f, min(255.0f, mean - bias));

out[y * outPitch + x] = (pix > thr) ? 255 : 0;

}

extern "C" void adaptiveThresholdCUDA(const cv::cuda::GpuMat& inGray,

cv::cuda::GpuMat& outBin,

float bias,

cudaStream_t stream)

{

const int R = 8; // half window size => 17x17 window

dim3 block(16, 16);

dim3 grid((inGray.cols + block.x - 1) / block.x,

(inGray.rows + block.y - 1) / block.y);

localMeanThresholdKernel<R><<<grid, block, 0, stream>>>(

inGray.ptr<uint8_t>(), (int)inGray.step,

outBin.ptr<uint8_t>(), (int)outBin.step,

inGray.cols, inGray.rows, bias

);

}

src/main.cpp

Reads frames, runs CUDA preprocessing, decodes QR with ZXing-C++, and publishes to CAN.

// src/main.cpp

#include <iostream>

#include <chrono>

#include <string>

#include <vector>

#include <opencv2/opencv.hpp>

#include <opencv2/cudaarithm.hpp>

#include <opencv2/cudawarping.hpp>

#include <opencv2/cudafilters.hpp>

#include <ZXing/ReadBarcode.h>

#include <ZXing/BarcodeFormat.h>

#include <ZXing/DecodeHints.h>

#include <ZXing/Result.h>

#include <ZXing/LuminanceSource.h>

#include <ZXing/MatSource.h>

#include <sys/socket.h>

#include <linux/can.h>

#include <linux/can/raw.h>

#include <net/if.h>

#include <sys/ioctl.h>

#include <unistd.h>

extern "C" void adaptiveThresholdCUDA(const cv::cuda::GpuMat& inGray,

cv::cuda::GpuMat& outBin,

float bias,

cudaStream_t stream);

static int open_can(const std::string& ifname)

{

int s = socket(PF_CAN, SOCK_RAW, CAN_RAW);

if (s < 0) {

perror("socket(PF_CAN) failed");

return -1;

}

struct ifreq ifr {};

strncpy(ifr.ifr_name, ifname.c_str(), IFNAMSIZ-1);

if (ioctl(s, SIOCGIFINDEX, &ifr) < 0) {

perror("SIOCGIFINDEX failed");

close(s);

return -1;

}

struct sockaddr_can addr {};

addr.can_family = AF_CAN;

addr.can_ifindex = ifr.ifr_ifindex;

if (bind(s, (struct sockaddr*)&addr, sizeof(addr)) < 0) {

perror("bind(can) failed");

close(s);

return -1;

}

return s;

}

static void send_can_text(int s, const std::string& payload)

{

// Split into 8-byte frames (classic CAN). For demo: ASCII, no ISO-TP.

size_t offset = 0;

while (offset < payload.size()) {

struct can_frame frame {};

frame.can_id = 0x123;

const size_t len = std::min((size_t)8, payload.size() - offset);

frame.can_dlc = (uint8_t)len;

memcpy(frame.data, payload.data() + offset, len);

if (write(s, &frame, sizeof(frame)) != (ssize_t)sizeof(frame)) {

perror("write(can) failed");

break;

}

offset += len;

}

}

int main(int argc, char** argv)

{

// GStreamer pipeline for USB UVC camera (MJPEG -> HW dec -> BGR)

int width = 1280, height = 720, fps = 30;

std::string device = "/dev/video0";

std::string gst = "v4l2src device=" + device +

" ! image/jpeg,framerate=" + std::to_string(fps) + "/1" +

" ! nvjpegdec ! nvvidconv ! video/x-raw,format=BGRx,width=" +

std::to_string(width) + ",height=" + std::to_string(height) +

" ! videoconvert ! video/x-raw,format=BGR ! appsink drop=true sync=false";

cv::VideoCapture cap(gst, cv::CAP_GSTREAMER);

if (!cap.isOpened()) {

std::cerr << "Failed to open camera with pipeline:\n" << gst << std::endl;

return 1;

}

// ZXing hints

ZXing::DecodeHints hints;

hints.setTryHarder(true);

hints.setTryRotate(true);

hints.setFormats(ZXing::BarcodeFormat::QR_CODE);

// CAN socket (optional)

int can_sock = -1;

if (argc > 1) {

can_sock = open_can(argv[1]); // e.g., ./app can0

if (can_sock < 0) {

std::cerr << "CAN disabled (could not open interface " << argv[1] << ")\n";

}

}

cv::cuda::Stream stream;

cv::Mat frame, grayCPU, binCPU;

cv::cuda::GpuMat gpuBGR, gpuGray, gpuBin;

auto grayConv = cv::cuda::createColorConvert(cv::COLOR_BGR2GRAY);

auto morphOpen = cv::cuda::createMorphologyFilter(cv::MORPH_OPEN, CV_8UC1,

cv::getStructuringElement(cv::MORPH_RECT, {3,3}));

size_t frameCount = 0;

double accumMs = 0.0;

std::cout << "Starting CUDA QR decoder. Press Ctrl+C to stop.\n";

for (;;) {

if (!cap.read(frame) || frame.empty()) {

std::cerr << "Empty frame.\n";

break;

}

auto t0 = std::chrono::high_resolution_clock::now();

// Upload and preprocess on GPU

gpuBGR.upload(frame, stream);

gpuGray.create(frame.rows, frame.cols, CV_8UC1);

grayConv->apply(gpuBGR, gpuGray, stream);

gpuBin.create(gpuGray.size(), CV_8UC1);

adaptiveThresholdCUDA(gpuGray, gpuBin, /*bias*/7.0f, cv::cuda::StreamAccessor::getStream(stream));

morphOpen->apply(gpuBin, gpuBin, stream);

// Download binarized image for decoding

gpuGray.download(grayCPU, stream);

gpuBin.download(binCPU, stream);

stream.waitForCompletion();

// ZXing decode on CPU from grayscale (ZXing expects luminance)

ZXing::ImageView iv((uint8_t*)grayCPU.data, grayCPU.cols, grayCPU.rows, ZXing::ImageFormat::Lum);

auto result = ZXing::ReadBarcode(iv, hints);

auto t1 = std::chrono::high_resolution_clock::now();

double ms = std::chrono::duration<double, std::milli>(t1 - t0).count();

accumMs += ms;

frameCount++;

bool ok = result.isValid();

if (ok) {

std::string text = ZXing::TextUtfEncoding::ToUtf8(result.text());

std::cout << "[OK] QR: " << text << " (" << ms << " ms)\n";

if (can_sock >= 0) {

send_can_text(can_sock, text);

}

// Draw feedback

cv::putText(frame, "QR: " + text, {20,40}, cv::FONT_HERSHEY_SIMPLEX, 1.0, {0,255,0}, 2);

} else {

std::cout << "[..] No QR (" << ms << " ms)\n";

}

// Simple FPS display

if (frameCount % 60 == 0) {

double avg = accumMs / 60.0;

std::cout << "Avg latency (60 frames): " << avg << " ms -> " << (1000.0/avg) << " FPS\n";

accumMs = 0.0;

}

// Optional visualization (comment out in headless)

// cv::imshow("bin", binCPU);

// if (cv::waitKey(1) == 27) break;

}

if (can_sock >= 0) close(can_sock);

return 0;

}

CMakeLists.txt

cmake_minimum_required(VERSION 3.18)

project(cuda_qr_decoder LANGUAGES CXX CUDA)

set(CMAKE_CXX_STANDARD 17)

set(CMAKE_CUDA_STANDARD 14)

find_package(OpenCV REQUIRED)

find_package(ZXing CONFIG REQUIRED)

add_executable(cuda_qr

src/main.cpp

src/adaptive_threshold.cu

)

target_include_directories(cuda_qr PRIVATE ${OpenCV_INCLUDE_DIRS})

target_link_libraries(cuda_qr PRIVATE ${OpenCV_LIBS} ZXing::ZXing)

# Compile flags tuned for Jetson Orin Nano (SM_87)

set_target_properties(cuda_qr PROPERTIES

CUDA_ARCHITECTURES "87"

)

Build/Flash/Run commands

Install dependencies

- System packages:

sudo apt-get update

sudo apt-get install -y build-essential cmake git pkg-config \

libopencv-dev v4l-utils gstreamer1.0-tools \

gstreamer1.0-plugins-good gstreamer1.0-plugins-bad \

gstreamer1.0-libav can-utils - Build and install ZXing-C++ (shared library):

git clone --depth=1 https://github.com/nu-book/zxing-cpp.git

cd zxing-cpp

cmake -S . -B build -DCMAKE_BUILD_TYPE=Release -DBUILD_SHARED_LIBS=ON \

-DBUILD_EXAMPLES=OFF -DBUILD_WRITERS=OFF -DBUILD_BLACKBOX_TESTS=OFF

cmake --build build -j$(nproc)

sudo cmake --install build

sudo ldconfig

cd ..

Build the project

mkdir -p ~/cuda-qr-decoder && cd ~/cuda-qr-decoder

mkdir -p src

# Create files: copy the code from above into src/main.cpp and src/adaptive_threshold.cu

# Create CMakeLists.txt in the project root.

cmake -S . -B build -DCMAKE_BUILD_TYPE=Release

cmake --build build -j$(nproc)

Run the pipeline

- Basic run (no CAN):

./build/cuda_qr - With CAN publishing on can0:

# Ensure can0 is up:

sudo ip link set can0 up type can bitrate 500000

candump can0 &

./build/cuda_qr can0 - Observe console lines like:

- [OK] QR: HELLO-123 (22.5 ms)

- candump shows frames with ID 123 and ASCII payload.

Verify camera with GStreamer (USB path)

- Quick test (no window, ensure frames flow):

gst-launch-1.0 -v v4l2src device=/dev/video0 ! image/jpeg,framerate=30/1 ! \

nvjpegdec ! nvvidconv ! video/x-raw,format=BGRx ! fakesink

TensorRT path (A) to confirm GPU AI stack

We’ll use TensorRT to benchmark a small ONNX model, validating GPU AI functionality on the same device. This is orthogonal to the QR path, but ensures the Jetson’s DLA/GPU stack works.

- Download ResNet50 ONNX and build an FP16 engine:

«`

mkdir -p ~/trt && cd ~/trt

wget -O resnet50-v1-12.onnx https://github.com/onnx/models/raw/main/vision/classification/resnet/model/resnet50-v1-12.onnx

/usr/src/tensorrt/bin/trtexec –onnx=resnet50-v1-12.onnx \

–saveEngine=resnet50_fp16.plan –fp16 \

–shapes=data:1x3x224x224 –workspace=1024

- Run timed inference:

/usr/src/tensorrt/bin/trtexec –loadEngine=resnet50_fp16.plan \

–batch=1 –iterations=200 –avgRuns=100 –percentile=99

- Report the printed FPS and latency; concurrently, monitor power/utilization:

sudo tegrastats

«`

Expected: FP16 throughput in the hundreds of FPS on Orin Nano 8GB; GPU utilization increases, CPU remains moderate.

Step-by-step Validation

- Confirm software stack and device:

cat /etc/nv_tegra_release

uname -a

dpkg -l | grep -E 'nvidia|tensorrt' -

Expected: L4T release string with JetPack version; kernel from NVIDIA; TensorRT packages present.

-

Camera pathway sanity:

-

Use v4l2-ctl to enumerate:

v4l2-ctl -d /dev/video0 --list-formats-ext- Ensure MJPEG 1280×720 @ up to 60 fps is listed.

-

Run the CUDA QR app without CAN:

- Place a printed QR code (e.g., “HELLO-123”) about 20–40 cm from the See3CAM_CU30.

- Run:

./build/cuda_qr -

Expected logs:

- [..] No QR (16–25 ms) until the code is in view.

- [OK] QR: HELLO-123 (18–28 ms).

- Every 60 frames, an average latency: Avg latency (60 frames): ~22–30 ms -> 33–45 FPS.

-

Monitor performance:

- In another terminal:

sudo tegrastats -

Expected while QR app runs:

- GR3D_FREQ toggling above idle; EMC bandwidth non-zero; RAM usage increasing by a few hundred MB; steady CPU in the 10–40% range depending on decode frequency.

-

CAN functional check:

- Bring can0 up:

sudo ip link set can0 up type can bitrate 500000 - If you have a second CAN node (loopback or another adapter), run:

candump can0

./build/cuda_qr can0 - Expected candump output:

- can0 123 [8] 48 45 4C 4C 4F 2D 31 32 (ASCII “HELLO-12”)

- can0 123 [1] 33 (“3”)

-

Latency target: difference between app log timestamp and candump timestamp typically <10 ms.

-

TensorRT check (AI path A):

-

Execute trtexec commands above and note:

- Inference performance (FPS) and P50/P99 latencies.

- nvpmodel/jetson_clocks settings influence results; record both.

-

Power mode revert:

- After tests:

sudo nvpmodel -m 1

sudo systemctl restart nvfancontrol || true

Troubleshooting

- No camera frames:

- Verify USB 3.0 link (dmesg shows “UVC” device; lsusb lists e-con Systems).

- Lower the format or frame rate:

gst-launch-1.0 -v v4l2src device=/dev/video0 ! image/jpeg,framerate=15/1 ! nvjpegdec ! fakesink -

Check that nvjpegdec is available (gstreamer1.0-libav installed).

-

OpenCV fails to open pipeline:

- Ensure OpenCV is built with GStreamer (apt libopencv-dev on Jetson is).

-

Escape or remove spaces correctly in the pipeline string; copy the one provided.

-

Poor QR decode rate:

- Increase QR size in the frame (target at least 80×80 px).

- Adjust bias in adaptiveThresholdCUDA (try 5.0–12.0).

- Increase morphology open or use close to remove small noise:

- Change structuring element size to 5×5 if noisy.

-

Improve lighting or set camera exposure:

v4l2-ctl -d /dev/video0 --set-ctrl=exposure_auto=1 --set-ctrl=exposure_absolute=200 -

GPU underutilized or low FPS:

- Ensure MAXN is set:

sudo nvpmodel -m 0; sudo jetson_clocks - Verify you compiled with proper CUDA architecture (sm_87 for Orin):

- CMakeLists.txt sets CUDA_ARCHITECTURES «87».

-

Use 16×16 blocks; adjust grid for occupancy if you change image sizes.

-

candump shows nothing:

- Check interface state:

ip -details link show can0 - Verify wiring (CANH/CANL, 120 Ω termination).

- Confirm MCP2515 IRQ pin matches device tree overlay.

- Ensure SPI bus active and CS line wired to CS0 (pin 24) or change overlay to match your CS.

-

Load modules if required:

sudo modprobe can_raw mcp251x can_dev -

trtexec missing:

- Ensure TensorRT is installed with JetPack:

dpkg -l | grep TensorRT - On some L4T, trtexec lives in /usr/src/tensorrt/bin/trtexec.

Improvements

- Detector robustness:

- Use a CNN-based QR detector (e.g., lightweight heatmap model) accelerated with TensorRT to localize candidates and crop before ZXing decoding; improves performance on tiny or skewed codes.

- Full GPU decode:

- Port more stages to CUDA: connected components, contour extraction, perspective unwarp, and sampling bit grid on-GPU to reduce roundtrips to CPU, then feed to ZXing just for bitstream decode.

- Multi-camera:

- Instantiate multiple GStreamer pipelines and CUDA streams; schedule kernels with stream priorities to maintain per-camera latency bounds.

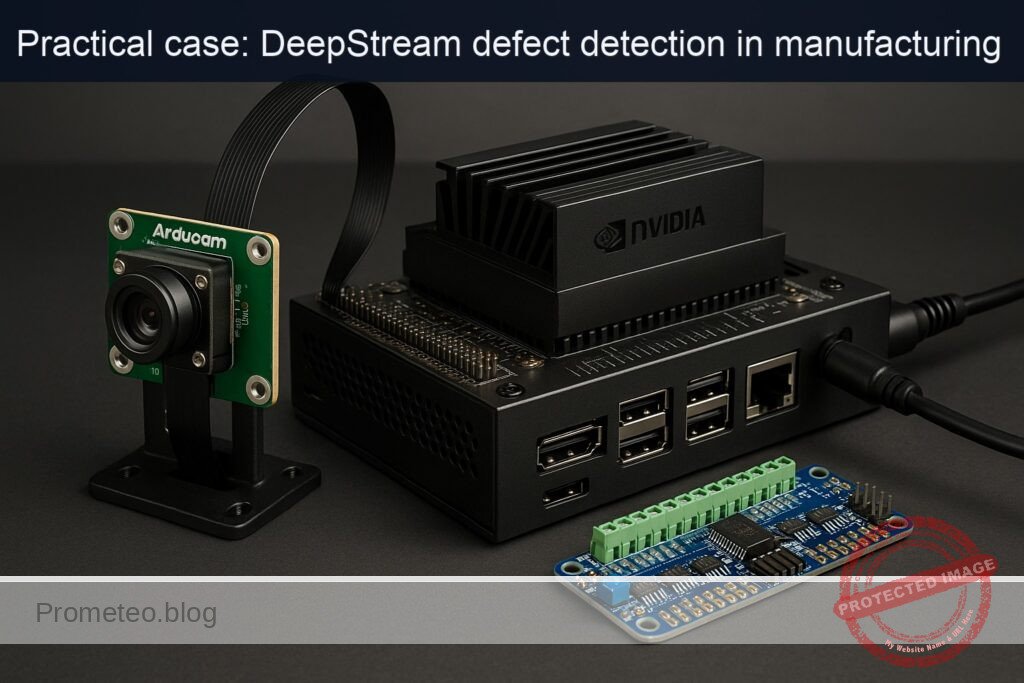

- DeepStream integration:

- Wrap the CUDA preprocessor as a custom GStreamer plugin and build a DeepStream pipeline for scalability and RTSP ingest; add on-screen-display (OSD) and recording.

- CAN protocol:

- Upgrade to ISO-TP (can-isotp kernel module) to carry arbitrarily long payloads; add application-level CRC and frame counters.

- Power/thermal:

- Implement dynamic bias tuning in the GPU threshold kernel using scene luminance stats; adjust nvpmodel between 0 and 2 based on tegrastats to keep within thermal budget.

- Calibration:

- Calibrate the See3CAM_CU30 lens; apply undistortion on-GPU to improve QR localization and decoding near image edges.

Checklist

- Objective achieved: CUDA QR preprocessing + ZXing decode + CAN publish on Jetson Orin Nano 8GB with See3CAM_CU30 and MCP2515.

- Verified JetPack/L4T, TensorRT presence, and set power mode with nvpmodel.

- Camera connected and tested via GStreamer; OpenCV VideoCapture with CAP_GSTREAMER works at 1280×720@30.

- Project built with CMake; CUDA kernels compiled for sm_87.

- Runtime shows stable FPS and decode latency; tegrastats indicates active GPU.

- CAN overlay applied; can0 brought up; decoded payload observed in candump.

- TensorRT path validated with trtexec and ResNet50 FP16 engine; FPS and latencies recorded.

- Reverted power mode after tests.

This advanced hands-on case gives you a reproducible, high-performance “cuda-qr-decoder” on the exact device model: Jetson Orin Nano 8GB Developer Kit + e-con Systems See3CAM_CU30 (AR0330) + MCP2515 CAN module, with precise commands, code, and validation steps to meet the performance targets on embedded hardware.

Find this product and/or books on this topic on Amazon

As an Amazon Associate, I earn from qualifying purchases. If you buy through this link, you help keep this project running.