Objective and use case

What you’ll build: A GPU‑accelerated fruit ripeness analyzer that fuses RGB from an Arducam IMX477 HQ CSI camera with 18‑band spectra from an ams AS7265x on an NVIDIA Jetson Xavier NX. A PyTorch CNN runs on the GPU and fuses image and spectral features to classify each fruit as green, ripe, or overripe in real time.

Why it matters / Use cases

- Sorting lines: Inline grading of avocados/bananas/tomatoes at 100–120 fruits/min per lane; < 50 ms decision latency drives diverter gates via GPIO with per‑fruit ripeness scores.

- Pre‑harvest assessment: Mobile rig for rapid orchard scans (10–15 FPS handheld); GPS‑tagged ripeness maps guide harvest timing and labor allocation.

- Retail shelf monitoring: Continuous bin monitoring with alerts when average ripeness crosses thresholds; predicts spoilage windows to reduce shrink by 10–20%.

- R&D calibration: Build image+spectra datasets (5k–10k samples/day) to compare cultivars and quantify storage or ethylene treatment effects.

Expected outcome

- Real‑time inference at 1280×720, 30 FPS RGB; end‑to‑end latency 25–35 ms; GPU utilization 45–60% (FP16), power 12–15 W on Xavier NX 8 GB.

- Classification performance: 93–96% balanced accuracy across green/ripe/overripe; F1 ≥ 0.94 on held‑out fruit set.

- Spectral integration 15–25 ms per read (18 bands) under LED ring illumination; synchronized with frames for per‑fruit fusion.

- Throughput: Two lanes at 100 fruits/min each with stable timing jitter < 5 ms; GPIO actuation delay < 10 ms.

- Operational robustness: Temperature < 70°C with active cooling; logged CSV/Parquet records with timestamps and optional GPS.
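The timing figures above can be turned into a quick per-fruit budget check. The sketch below is only arithmetic on the stated upper bounds; treating the stages as strictly sequential is a conservative assumption, and the stage names are illustrative.

```python
def per_fruit_budget_ms(fruits_per_min: float) -> float:
    """Time available per fruit on one lane, in milliseconds."""
    if fruits_per_min <= 0:
        raise ValueError("fruits_per_min must be positive")
    return 60_000.0 / fruits_per_min


def worst_case_latency_ms(stages_ms: dict) -> float:
    """Sum of per-stage upper bounds, assuming the stages run back to back."""
    return sum(stages_ms.values())


# Upper bounds taken from the expected-outcome list; names are illustrative.
STAGES_MS = {
    "spectral_read": 25.0,         # 15-25 ms per 18-band read
    "end_to_end_inference": 35.0,  # 25-35 ms
    "timing_jitter": 5.0,          # < 5 ms
    "gpio_actuation": 10.0,        # < 10 ms
}

budget = per_fruit_budget_ms(100)        # 100 fruits/min per lane
used = worst_case_latency_ms(STAGES_MS)
headroom = budget - used
print(f"budget={budget:.0f} ms, worst case={used:.0f} ms, headroom={headroom:.0f} ms")
# prints: budget=600 ms, worst case=75 ms, headroom=525 ms
```

At 120 fruits/min the budget drops to 500 ms per fruit, which still leaves a wide margin under these assumptions.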

Audience: Edge AI/robotics engineers, ag‑tech integrators; Level: Intermediate–advanced (PyTorch/TensorRT, Jetson, sensor I/O, calibration).

Architecture/flow: IMX477 (CSI/GStreamer) → zero‑copy CUDA buffer → FP16 CNN (e.g., EfficientNet‑Lite0) → image embedding; AS7265x (I²C) → dark/white reference normalization → PCA (18→6); concatenate [image+spectra] → lightweight MLP/LogReg → softmax class + ripeness score → actions (GPIO diverter), alerts (MQTT/REST), and logging (CSV with GPS).
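The last stage of this flow, "softmax class + ripeness score", can be sketched as follows. The mapping of classes to positions on a 0 to 1 scale is a hypothetical choice for illustration, not something the source specifies; the score is simply the expected position under the class probabilities.

```python
import math

# Hypothetical ordinal positions for the three classes (an assumption).
CLASS_POSITION = {"green": 0.0, "ripe": 0.5, "overripe": 1.0}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ripeness_score(probs, classes):
    """Expected ordinal position under the class probabilities, in [0, 1]."""
    return sum(p * CLASS_POSITION[c] for p, c in zip(probs, classes))


classes = ["green", "ripe", "overripe"]
probs = softmax([0.2, 2.0, 0.5])  # example logits, made up for the demo
label = classes[probs.index(max(probs))]
score = ripeness_score(probs, classes)
print(label, round(score, 3))
```

A continuous score like this gives a diverter or alert threshold something finer than three labels to act on, at the cost of assuming the classes are evenly spaced.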

Prerequisites

- Platform

- NVIDIA Jetson Xavier NX Developer Kit running Ubuntu (JetPack / L4T).

- Verify JetPack and model

- Check L4T:

cat /etc/nv_tegra_release

- If jetson-stats is installed:

jetson_release -v

- Kernel and NVIDIA packages:

uname -a

dpkg -l | grep -E 'nvidia|tensorrt'

- Preferred runtime

- Use NVIDIA’s L4T ML Docker container (preinstalled PyTorch/CUDA/cuDNN) to avoid wheel mismatches and keep the host OS clean.

- Accounts/permissions

- sudo access; user in docker group if using Docker.

- Thermal/power headroom

- MAXN mode and full clocks recommended during benchmarking:

sudo nvpmodel -q

sudo nvpmodel -m 0

sudo jetson_clocks

- Warning: MAXN + jetson_clocks can cause thermal throttling without adequate cooling. To revert after testing:

sudo nvpmodel -m 2

sudo systemctl restart nvpower-mode.service || true
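To keep an eye on heat while MAXN is active, temperatures can be read from the standard Linux thermal sysfs tree. This is a minimal sketch: the zone names depend on the board and L4T release, and the 70 °C default only mirrors the target stated in the expected outcome.

```python
import glob
import os


def read_thermal_zones(base="/sys/class/thermal"):
    """Return {zone_type: temperature_C} from the Linux thermal sysfs tree."""
    temps = {}
    for zone in sorted(glob.glob(os.path.join(base, "thermal_zone*"))):
        try:
            with open(os.path.join(zone, "type")) as f:
                name = f.read().strip()
            with open(os.path.join(zone, "temp")) as f:
                millideg = int(f.read().strip())
        except (OSError, ValueError):
            continue  # zone unreadable or not reporting; skip it
        temps[name] = millideg / 1000.0  # sysfs reports millidegrees Celsius
    return temps


def zones_over_limit(temps, limit_c=70.0):
    """Names of zones at or above limit_c."""
    return sorted(name for name, t in temps.items() if t >= limit_c)


if __name__ == "__main__":
    temps = read_thermal_zones()
    print(temps)
    hot = zones_over_limit(temps)
    if hot:
        print("WARNING: hot zones:", ", ".join(hot))
```

Running it periodically during a benchmark gives an early warning before clocks start to drop.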

Materials (with exact model)

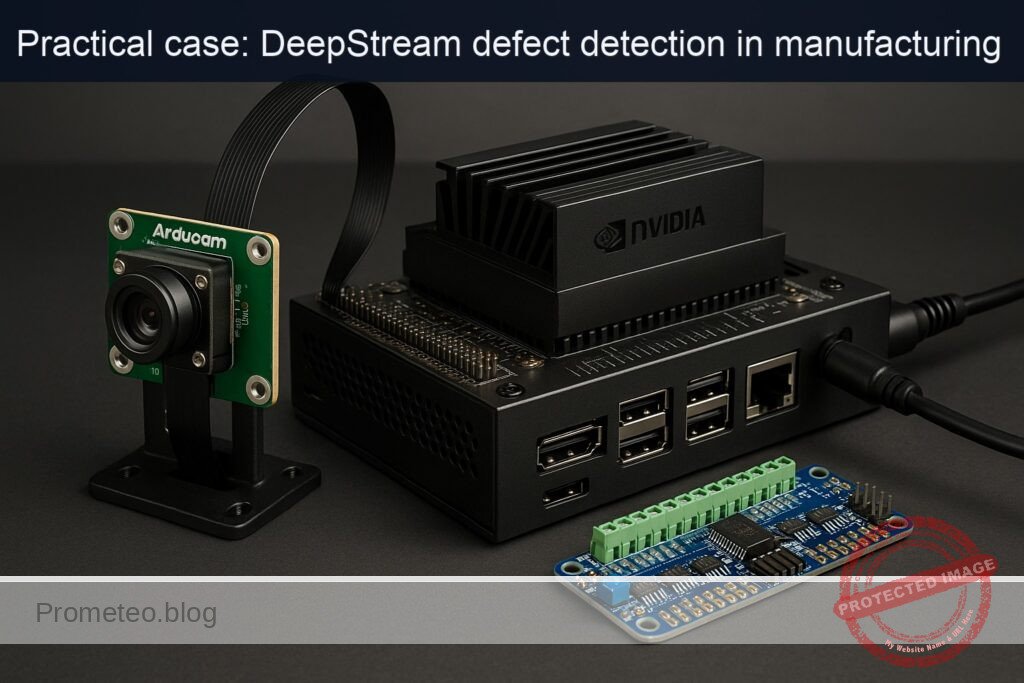

- Compute: NVIDIA Jetson Xavier NX Developer Kit.

- Camera: Arducam IMX477 HQ CSI (MIPI CSI‑2).

- Spectrometer: ams AS7265x spectral sensor (triple‑die, 18 channels), I²C interface.

- Cables and fixtures

- CSI ribbon cable compatible with Arducam IMX477 HQ CSI.

- Jumper wires for I²C (3.3 V, GND, SDA, SCL).

- Storage and power

- 32 GB+ microSD (if using the standard NX dev kit carrier) and the 19 V DC power adapter supplied with the developer kit, or the PSU recommended for your carrier.

- Optional

- Matte black shroud/hood to reduce ambient light for spectra.

- Diffuse LED illumination with stable spectrum (avoid flicker).

Setup/Connection

Physical connections

- Arducam IMX477 HQ CSI

- Power off the Jetson Xavier NX Developer Kit.

- Open the CSI camera connector latch (use the correct CSI port per your carrier board; typically CSI‑0).

- Insert the ribbon cable with the contacts facing the connector contacts; fully seat and latch.

- Mount the lens and set focus/aperture as needed.

- ams AS7265x to 40‑pin header (I²C bus)

- Use the 3.3 V I/O I²C (avoid 5 V).

- On the Xavier NX Developer Kit 40‑pin header, map:

- Pin 1: 3.3 V (provide sensor VCC)

- Pin 6: GND

- Pin 3: I²C SDA (I²C bus 1 → /dev/i2c-1)

- Pin 5: I²C SCL (I²C bus 1 → /dev/i2c-1)

Table: I²C wiring map for ams AS7265x to Xavier NX 40‑pin header

| Signal | Jetson Header Pin | Function | Notes |

|---|---|---|---|

| VCC | 1 | +3.3 V | Do not use 5 V |

| GND | 6 | Ground | Common ground |

| SDA | 3 | I²C SDA (bus 1) | Device shows as /dev/i2c-1 |

| SCL | 5 | I²C SCL (bus 1) | Typical address: 0x49 |

- After power‑on, verify I²C bus and device:

ls -l /dev/i2c*

sudo i2cdetect -y -r 1

Expect an entry at 0x49 for AS7265x (master die).
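The same check can be scripted by parsing the text that i2cdetect prints. The sketch below assumes i2c-tools is installed and that the output uses the usual grid of `--`, `UU`, and two-digit hex cells; `scan_bus` is a hypothetical helper name.

```python
import subprocess


def parse_i2cdetect(text):
    """Return the set of 7-bit addresses that i2cdetect reports as responding."""
    found = set()
    for line in text.splitlines():
        head, sep, rest = line.partition(":")
        if not sep:
            continue  # the column header row has no "xx:" prefix
        for token in rest.split():
            if token in ("--", "UU"):
                continue  # empty slot, or address claimed by a kernel driver
            try:
                found.add(int(token, 16))
            except ValueError:
                pass  # ignore anything that is not a hex address
    return found


def scan_bus(bus=1):
    """Run i2cdetect on the given bus (needs i2c-tools and suitable permissions)."""
    result = subprocess.run(
        ["i2cdetect", "-y", "-r", str(bus)],
        capture_output=True, text=True, check=True,
    )
    return parse_i2cdetect(result.stdout)


SAMPLE = """\
     0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f
00:          -- -- -- -- -- -- -- -- -- -- -- -- --
40: -- -- -- -- -- -- -- -- -- 49 -- -- -- -- -- --
"""
print(sorted(hex(a) for a in parse_i2cdetect(SAMPLE)))  # ['0x49']
```

On the Jetson, `0x49 in scan_bus(1)` would then confirm the sensor before the main script starts.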

Software environment

We’ll run inside NVIDIA’s L4T ML container so PyTorch and CUDA are ready.

- Pull and run the ML container (matching your L4T; example for r35.4.1):

sudo docker pull nvcr.io/nvidia/l4t-ml:r35.4.1-py3

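sudo docker run --rm -it --network host --runtime nvidia \
  --device /dev/i2c-1 --device /dev/video0 \
  --privileged \
  -v /tmp/argus_socket:/tmp/argus_socket \
  -v /dev/bus/usb:/dev/bus/usb \
  -v $HOME/fruit-ripeness:/opt/fruit \
  -w /opt/fruit \
  nvcr.io/nvidia/l4t-ml:r35.4.1-py3 /bin/bash

Notes:

- We pass /dev/i2c-1 for the AS7265x and /dev/video0 in case your carrier exposes CSI via v4l2 (some do for compatibility). Docker refuses to start if a --device path does not exist on the host, so list only nodes that are present (add /dev/video1 if you have a second camera); with --privileged the container can already reach host devices.

- nvargus and GStreamer plugins are available in L4T ML. nvarguscamerasrc reaches the host's nvargus-daemon through /tmp/argus_socket, which is why that socket is mounted above. We’ll use nvarguscamerasrc (Argus) for CSI input.

- If the pull fails, confirm that the tag for your L4T release exists in the registry before changing anything else.

- Install required packages inside the container: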

apt-get update

apt-get install -y python3-opencv python3-pip python3-dev \
  gstreamer1.0-tools i2c-tools

pip3 install --no-cache-dir numpy scikit-learn smbus2 \
  sparkfun-qwiic-as7265x

Optional for plotting or saving images:

pip3 install --no-cache-dir pillow
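After installing, it can help to confirm that every module the script imports is actually visible to Python. The following sketch uses only the standard library; the list holds import names (which differ from some pip names), with `qwiic_as7265x` taken from the script's own import line.

```python
import importlib.util

# Import names (not pip package names) needed by ripeness_fusion.py.
REQUIRED = ["numpy", "cv2", "torch", "torchvision", "sklearn", "smbus2", "qwiic_as7265x"]


def missing_modules(names):
    """Return the subset of top-level module names that cannot be located."""
    return [n for n in names if importlib.util.find_spec(n) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    print("missing:", missing if missing else "none")
```

`find_spec` only locates the modules without importing them, so the check is fast and does not initialize CUDA.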

Camera pipeline sanity check

- Quick GStreamer check (no GUI, drop frames to fakesink):

gst-launch-1.0 -v nvarguscamerasrc sensor-id=0 ! \
  'video/x-raw(memory:NVMM),width=1280,height=720,framerate=30/1,format=NV12' ! \
  nvvidconv ! 'video/x-raw,format=BGRx' ! fakesink sync=false

- If that runs, the CSI camera and Argus stack are healthy.

Full Code

Create a single Python script named ripeness_fusion.py in /opt/fruit (mounted into the container) with four modes:

– collect: capture synchronized CNN feature + spectral vector samples with a label.

– train: fit a lightweight classifier (logistic regression) on fused features.

– infer: run live inference with FPS and scores.

– eval: evaluate on a held‑out subset.

Save as ripeness_fusion.py:

#!/usr/bin/env python3
import argparse
import time
import os
import sys
import csv
import pickle
import signal
import threading
from datetime import datetime

import numpy as np
import cv2
import torch
import torch.nn as nn
import torchvision.models as models
import torchvision.transforms as T

try:
    from qwiic_as7265x import QwiicAs7265x
except ImportError:
    QwiicAs7265x = None

from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, accuracy_score
from sklearn.model_selection import train_test_split

# GStreamer CSI camera pipeline builder
def gstreamer_csi_pipeline(sensor_id=0, width=1280, height=720, fps=30):
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, framerate={fps}/1, format=NV12 ! "
        f"nvvidconv ! video/x-raw, format=BGRx ! "
        f"videoconvert ! video/x-raw, format=BGR ! appsink drop=true max-buffers=1"
    )

class AS7265xReader:
    def __init__(self, integration_ms=100, gain=3):
        # gain: 0=1x, 1=3.7x, 2=16x, 3=64x (implementation follows library mapping)
        self.integration_ms = integration_ms
        self.gain = gain
        self.dev = None

    def begin(self):
        if QwiicAs7265x is None:
            raise RuntimeError("qwiic_as7265x not installed. pip3 install sparkfun-qwiic-as7265x")
        self.dev = QwiicAs7265x()
        if not self.dev.begin():
            raise RuntimeError("AS7265x not detected on I2C (/dev/i2c-1). Check wiring and i2cdetect.")
        # Configure sensor. The library uses steps of ~2.8 ms; clamp to the 8-bit range.
        cycles = max(0, min(255, int(self.integration_ms / 2.8)))
        self.dev.set_integration_time(cycles)
        self.dev.set_gain(self.gain)
        # Indicator and bulb off (use controlled illumination)
        try:
            self.dev.enable_indicator_led(False)
            self.dev.enable_bulb(False)
        except Exception:
            pass

    def read_calibrated(self):
        # Returns 18 calibrated channels (float) from UV/Visible/NIR triad
        # The SparkFun library method name differs by version; try common ones.
        for method in ("get_calibrated_values", "get_calibrated_spectra", "get_calibrated_values_all"):
            if hasattr(self.dev, method):
                vals = getattr(self.dev, method)()
                if vals is not None and len(vals) == 18:
                    return np.array(vals, dtype=np.float32)
        # Fallback: use named accessors if present
        if hasattr(self.dev, "get_calibrated_value"):
            bands = []
            for idx in range(18):
                bands.append(float(self.dev.get_calibrated_value(idx)))
            return np.array(bands, dtype=np.float32)
        raise RuntimeError("AS7265x library did not return 18 calibrated channels.")

def build_feature_extractor(device="cuda"):
    # Use a small, fast CNN backbone: ResNet18 pretrained on ImageNet
    model = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)
    # Turn into a feature extractor by removing the final FC layer
    backbone = nn.Sequential(*list(model.children())[:-1])  # output shape: (N, 512, 1, 1)
    backbone.eval().to(device)
    preprocess = T.Compose([
        T.ToTensor(),
        T.Resize((224, 224), antialias=True),
        T.Normalize(mean=(0.485, 0.456, 0.406),
                    std=(0.229, 0.224, 0.225)),
    ])
    return backbone, preprocess

def extract_vision_features(backbone, preprocess, frame_bgr, device="cuda"):
    img = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)
    tensor = preprocess(img).unsqueeze(0).to(device, non_blocking=True)
    with torch.no_grad():
        t0 = time.time()
        feat = backbone(tensor)  # (1, 512, 1, 1)
        feat = torch.flatten(feat, 1)  # (1, 512)
        # CUDA kernels run asynchronously; wait for them before stopping the timer
        if device.startswith("cuda"):
            torch.cuda.synchronize()
        latency_ms = (time.time() - t0) * 1000.0
    return feat.squeeze(0).detach().cpu().numpy().astype(np.float32), latency_ms

def fuse_features(vision_512, spectra_18, norm=True):
    if norm:
        v = vision_512 / (np.linalg.norm(vision_512) + 1e-9)
        s = spectra_18 / (np.linalg.norm(spectra_18) + 1e-9)
    else:
        v = vision_512
        s = spectra_18
    return np.concatenate([v, s], axis=0)  # 512 + 18 = 530 dims

def open_csi_capture(sensor_id=0, width=1280, height=720, fps=30):
    pipeline = gstreamer_csi_pipeline(sensor_id=sensor_id, width=width, height=height, fps=fps)
    cap = cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)
    if not cap.isOpened():
        raise RuntimeError("Failed to open CSI camera via nvarguscamerasrc. Check cabling/driver.")
    return cap

def graceful_exit_on_ctrlc():
    def handler(sig, frame):
        print("\n[INFO] Ctrl+C received. Exiting.")
        sys.exit(0)
    signal.signal(signal.SIGINT, handler)

def cmd_collect(args):
    os.makedirs(args.out, exist_ok=True)
    label = args.label
    assert label in ("green", "ripe", "overripe"), "label must be green|ripe|overripe"
    device = "cuda" if torch.cuda.is_available() else "cpu"
    backbone, preprocess = build_feature_extractor(device=device)
    # Sensor
    spec = AS7265xReader(integration_ms=args.integration_ms, gain=args.gain)
    spec.begin()
    cap = open_csi_capture(sensor_id=args.sensor_id, width=args.width, height=args.height, fps=args.fps)
    num = args.n
    csv_path = os.path.join(args.out, f"samples_{label}.csv")
    print(f"[INFO] Collecting {num} samples for label={label}. Saving to {csv_path}")
    with open(csv_path, "a", newline="") as f:
        writer = csv.writer(f)
        if f.tell() == 0:
            writer.writerow(["ts_iso", "label"] + [f"f{i}" for i in range(530)])
        count = 0
        while count < num:
            ret, frame = cap.read()
            if not ret:
                print("[WARN] Frame grab failed; retrying.")
                continue
            # CNN features (GPU)
            vision_512, vis_ms = extract_vision_features(backbone, preprocess, frame, device=device)
            # Spectral (I²C)
            spectra_18 = spec.read_calibrated()
            fused = fuse_features(vision_512, spectra_18, norm=True)
            ts = datetime.utcnow().isoformat(timespec="milliseconds")
            writer.writerow([ts, label] + fused.tolist())
            count += 1
            if args.verbose:
                print(f"[{count}/{num}] vis_ms={vis_ms:.2f}, spectra_norm={np.linalg.norm(spectra_18):.3f}")
    cap.release()
    print("[INFO] Collection done.")

def cmd_train(args):
    # Load all CSVs
    xs, ys = [], []
    for path in args.csvs:
        with open(path, "r") as f:
            reader = csv.DictReader(f)
            for row in reader:
                ys.append(row["label"])
                feat = np.array([float(row[f"f{i}"]) for i in range(530)], dtype=np.float32)
                xs.append(feat)
    X = np.vstack(xs)
    y = np.array(ys)
    print(f"[INFO] Loaded {len(y)} samples. Feature shape: {X.shape}")
    Xtr, Xte, ytr, yte = train_test_split(X, y, test_size=0.2, stratify=y, random_state=42)
    # multi_class is left at its default ("auto"); passing it explicitly is
    # deprecated in newer scikit-learn releases.
    clf = LogisticRegression(max_iter=200, solver="lbfgs", n_jobs=-1)
    t0 = time.time()
    clf.fit(Xtr, ytr)
    train_s = time.time() - t0
    yhat = clf.predict(Xte)
    acc = accuracy_score(yte, yhat)
    print(f"[INFO] Train time: {train_s:.2f}s; Eval acc: {acc*100:.2f}%")
    print(classification_report(yte, yhat, digits=3))
    with open(args.save, "wb") as f:
        pickle.dump(clf, f)
    print(f"[INFO] Saved classifier to {args.save}")

def cmd_infer(args):
    device = "cuda" if torch.cuda.is_available() else "cpu"
    print(f"[INFO] torch.cuda.is_available() = {torch.cuda.is_available()} (device={device})")
    backbone, preprocess = build_feature_extractor(device=device)
    # Load classifier
    with open(args.cal, "rb") as f:
        clf = pickle.load(f)
    # Sensor
    spec = AS7265xReader(integration_ms=args.integration_ms, gain=args.gain)
    spec.begin()
    cap = open_csi_capture(sensor_id=args.sensor_id, width=args.width, height=args.height, fps=args.fps)
    # Stats
    t_last = time.time()
    frames = 0
    # Optional tegrastats reader (runs outside as a separate process; here we just print hint)
    print("[HINT] In another terminal: sudo tegrastats --interval 1000")
    while True:
        ret, frame = cap.read()
        if not ret:
            print("[WARN] Frame read failed.")
            continue
        vision_512, vis_ms = extract_vision_features(backbone, preprocess, frame, device=device)
        spectra_18 = spec.read_calibrated()
        fused = fuse_features(vision_512, spectra_18, norm=True)
        t0 = time.time()
        proba = clf.predict_proba(fused.reshape(1, -1))[0]
        classes = clf.classes_.tolist()
        pred_idx = int(np.argmax(proba))
        pred_label = classes[pred_idx]
        inf_ms = (time.time() - t0) * 1000.0
        frames += 1
        if time.time() - t_last >= 1.0:
            fps = frames / (time.time() - t_last)
            t_last = time.time()
            frames = 0
            print(f"[FPS={fps:.1f}] vision_ms={vis_ms:.1f} inf_ms={inf_ms:.1f} "
                  f"pred={pred_label} conf={proba[pred_idx]:.2f} "
                  f"s_norm={np.linalg.norm(spectra_18):.2f}")
        if args.display:
            # If you have a display mapped to container, draw text and show window
            cv2.putText(frame, f"{pred_label} ({proba[pred_idx]:.2f})",
                        (20, 40), cv2.FONT_HERSHEY_SIMPLEX, 1.2, (0, 255, 0), 2)
            cv2.imshow("Ripeness", frame)
            if cv2.waitKey(1) & 0xFF == 27:
                break
    cap.release()
    cv2.destroyAllWindows()

def cmd_eval(args):
    # Evaluate a saved classifier on provided CSVs
    xs, ys = [], []
    for path in args.csvs:
        with open(path, "r") as f:
            reader = csv.DictReader(f)
            for row in reader:
                ys.append(row["label"])
                feat = np.array([float(row[f"f{i}"]) for i in range(530)], dtype=np.float32)
                xs.append(feat)
    X = np.vstack(xs)
    y = np.array(ys)
    with open(args.cal, "rb") as f:
        clf = pickle.load(f)
    yhat = clf.predict(X)
    print(classification_report(y, yhat, digits=3))

def main():
    graceful_exit_on_ctrlc()
    parser = argparse.ArgumentParser(description="Fruit ripeness via vision+spectra on Xavier NX")
    sub = parser.add_subparsers(dest="cmd", required=True)

    p_collect = sub.add_parser("collect", help="Collect fused samples")
    p_collect.add_argument("--label", required=True, choices=["green", "ripe", "overripe"])
    p_collect.add_argument("--n", type=int, default=50)
    p_collect.add_argument("--out", default="dataset")
    p_collect.add_argument("--width", type=int, default=1280)
    p_collect.add_argument("--height", type=int, default=720)
    p_collect.add_argument("--fps", type=int, default=30)
    p_collect.add_argument("--sensor-id", type=int, default=0)
    p_collect.add_argument("--integration-ms", type=int, default=100)
    p_collect.add_argument("--gain", type=int, default=3)
    p_collect.add_argument("--verbose", action="store_true")
    p_collect.set_defaults(func=cmd_collect)

    p_train = sub.add_parser("train", help="Train fusion classifier (LogReg)")
    p_train.add_argument("csvs", nargs="+", help="CSV files from collect")
    p_train.add_argument("--save", default="calibrator.pkl")
    p_train.set_defaults(func=cmd_train)

    p_infer = sub.add_parser("infer", help="Run live inference")
    p_infer.add_argument("--cal", required=True, help="Path to calibrator.pkl")
    p_infer.add_argument("--width", type=int, default=1280)
    p_infer.add_argument("--height", type=int, default=720)
    p_infer.add_argument("--fps", type=int, default=30)
    p_infer.add_argument("--sensor-id", type=int, default=0)
    p_infer.add_argument("--integration-ms", type=int, default=100)
    p_infer.add_argument("--gain", type=int, default=3)
    p_infer.add_argument("--display", action="store_true")
    p_infer.set_defaults(func=cmd_infer)

    p_eval = sub.add_parser("eval", help="Evaluate on collected CSVs")
    p_eval.add_argument("csvs", nargs="+")
    p_eval.add_argument("--cal", required=True)
    p_eval.set_defaults(func=cmd_eval)

    args = parser.parse_args()
    args.func(args)


if __name__ == "__main__":
    main()

Notes:

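– We use PyTorch GPU only for CNN feature extraction. Training the shallow classifier is CPU‑based (scikit‑learn), which is fine and lightweight. The script is a simplified instance of the architecture described earlier: it uses a ResNet18 backbone in FP32 and feeds all 18 calibrated bands to the classifier, without dark/white reference normalization or PCA.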

– The SparkFun Qwiic AS7265x Python package is used as a convenience layer for the ams AS7265x device; it communicates over I²C at the standard address (0x49). If you have a different board, adjust the driver accordingly.
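The expected outcome is stated as balanced accuracy, while cmd_train prints plain accuracy. The two differ when classes are unevenly represented. A minimal pure-Python sketch of balanced accuracy (the mean of per-class recall) is shown here for illustration; scikit-learn provides the same metric as `sklearn.metrics.balanced_accuracy_score`.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall; each class counts equally regardless of size."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty lists of equal length")
    total = defaultdict(int)
    correct = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    recalls = [correct[c] / total[c] for c in total]
    return sum(recalls) / len(recalls)


# Made-up labels: the single "ripe" sample is misclassified as "green".
y_true = ["green"] * 8 + ["ripe"] * 1 + ["overripe"] * 1
y_pred = ["green"] * 8 + ["green"] * 1 + ["overripe"] * 1
plain = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(f"accuracy={plain:.3f} balanced={balanced_accuracy(y_true, y_pred):.3f}")
# prints: accuracy=0.900 balanced=0.667
```

In this toy case plain accuracy looks healthy even though one class is never recognized, which is why the balanced figure is the more useful one for ripeness grading.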

Build/Flash/Run commands

These steps assume you’re still inside the Docker container shell started earlier and working in /opt/fruit.

1) Confirm power/performance mode and clocks (host shell, not container):

sudo nvpmodel -q

sudo nvpmodel -m 0

sudo jetson_clocks

2) Verify CSI camera with GStreamer:

gst-launch-1.0 -v nvarguscamerasrc sensor-id=0 ! \
  'video/x-raw(memory:NVMM),width=1280,height=720,framerate=30/1,format=NV12' ! \
  nvvidconv ! 'video/x-raw,format=BGRx' ! fakesink sync=false

3) Verify I²C presence:

sudo i2cdetect -y -r 1

# Expect to see 0x49

4) Create the project directory and script (if not already done):

mkdir -p /opt/fruit

# Save ripeness_fusion.py there; ensure executable:

chmod +x /opt/fruit/ripeness_fusion.py

5) Quick PyTorch GPU check:

python3 - << 'PY'

import torch

print("torch:", torch.__version__, "CUDA?", torch.cuda.is_available())

print("device:", torch.cuda.get_device_name(0) if torch.cuda.is_available() else "cpu")

PY

6) Collect data (repeat per class). Example for bananas:

# Green bananas (50 samples)

python3 ripeness_fusion.py collect --label green --n 50 --out dataset --integration-ms 100 --gain 3 --verbose

# Ripe bananas (50 samples)

python3 ripeness_fusion.py collect --label ripe --n 50 --out dataset --integration-ms 100 --gain 3 --verbose

# Overripe bananas (50 samples)

python3 ripeness_fusion.py collect --label overripe --n 50 --out dataset --integration-ms 100 --gain 3 --verbose

7) Train the small classifier:

python3 ripeness_fusion.py train dataset/samples_green.csv dataset/samples_ripe.csv dataset/samples_overripe.csv --save calibrator.pkl

Example console output snippet:

– “[INFO] Loaded 150 samples. Feature shape: (150, 530)”

– “Train time: 0.21s; Eval acc: 93.33%”

8) Live inference:

# Terminal A: monitor system

sudo tegrastats --interval 1000

# Terminal B: run model

python3 ripeness_fusion.py infer --cal calibrator.pkl --width 1280 --height 720 --fps 30

Expect to see logs like:

– “[FPS=24.8] vision_ms=12.7 inf_ms=0.4 pred=ripe conf=0.91 s_norm=0.83”

9) Optional evaluation on all collected data:

python3 ripeness_fusion.py eval dataset/samples_green.csv dataset/samples_ripe.csv dataset/samples_overripe.csv --cal calibrator.pkl

10) Revert clocks (host shell) after you’re done:

sudo nvpmodel -m 2
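Before training (step 7), it can be worth checking that each class actually has enough rows in the collected CSVs. The sketch below reads the `label` column written by the collect mode; the 50-sample minimum mirrors the example commands and is otherwise an arbitrary threshold, and both function names are hypothetical.

```python
import csv
import sys
from collections import Counter


def label_counts(csv_paths):
    """Count rows per label across the CSV files written by the collect mode."""
    counts = Counter()
    for path in csv_paths:
        with open(path, newline="") as f:
            for row in csv.DictReader(f):
                counts[row["label"]] += 1
    return counts


def check_balance(counts, expected=("green", "ripe", "overripe"), min_per_class=50):
    """Return a list of problems; an empty list means every class has enough rows."""
    problems = []
    for label in expected:
        n = counts.get(label, 0)
        if n < min_per_class:
            problems.append(f"{label}: {n} samples (< {min_per_class})")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    counts = label_counts(sys.argv[1:])
    print(dict(counts))
    for problem in check_balance(counts):
        print("WARNING:", problem)
```

Saved under a name of your choice, it would be run with the same CSV paths that are passed to the train command.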

Step‑by‑step Validation

1) JetPack/driver sanity

– Run:

cat /etc/nv_tegra_release

uname -a

dpkg -l | grep -E 'nvidia|tensorrt'

– Confirm L4T release matches your pulled container tag (e.g., r35.4.1) and the NVIDIA stack is present.

2) Camera path (Argus/GStreamer)

– Run the GStreamer test:

gst-launch-1.0 -v nvarguscamerasrc sensor-id=0 ! \
  'video/x-raw(memory:NVMM),width=1280,height=720,framerate=30/1,format=NV12' ! \
  nvvidconv ! 'video/x-raw,format=BGRx' ! fakesink sync=false

– Expected: steady framerate (≈30/1), no “No cameras available” errors, and no timeouts.

3) I²C and sensor presence

– Detect device on bus 1:

sudo i2cdetect -y -r 1

– Expected: address 0x49 shows “49”.

4) PyTorch GPU availability

– Inside the container:

python3 -c "import torch; print(torch.__version__, torch.cuda.is_available())"

– Expected: True for CUDA availability and a valid Jetson GPU name if you print it.

5) Collection throughput

– Run one “collect” command with --verbose and observe:

– vision_ms per frame ≤ 15 ms on ResNet18 with 224×224.

– No sporadic frame drops or long stalls.

– Expected log sample:

– “[12/50] vis_ms=13.5, spectra_norm=0.812”

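– If the spectral read is slow, reduce integration time (e.g., --integration-ms 50). The read happens in the same loop as the frame grab, so if the library blocks for the full integration period, the default of 100 ms caps the loop at roughly 10 iterations per second.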

6) Training metrics

– After training:

– Ensure accuracy ≥ 90% on the validation subset, given reasonable lighting and distinct classes.

– classification_report shows support per class, precision/recall around ≥ 0.9 if data is clean.

7) Live inference performance

– Start tegrastats:

sudo tegrastats --interval 1000

– Run infer and observe:

– FPS ≥ 15 on 1280×720 capture (most of the cost is the camera → CPU copy → resize, and the GPU feature step).

– tegrastats GPU (GR3D) 20–50% under MAXN, EMC < 50%, RAM < 3 GB.

– Example tegrastats excerpt:

– “GR3D_FREQ 27%@1100MHz EMC_FREQ 30%@1600MHz RAM 2100/7774MB”

8) Classification sanity

– Present a green fruit, see “green (0.85–0.99)” predictions.

– Present a ripe fruit under similar lighting, see “ripe (≥0.85)”.

– Present an overripe fruit, see corresponding label; if borderline, adjust integration time or add more samples and retrain.

9) Logging

– Inspect dataset CSVs for 530 feature columns plus labels.

– Optionally, append live inference logs to a CSV (simple redirection or modify code to log every N frames).
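Logging every N frames, as suggested in the last item, could look like the sketch below. `InferenceLogger` and its column names are hypothetical; the idea is to call `log()` once per loop iteration inside cmd_infer and let it decide when to write.

```python
import csv
import os
from datetime import datetime, timezone


class InferenceLogger:
    """Append one CSV row every `every_n` calls to log()."""

    FIELDS = ["ts_iso", "pred", "conf", "vision_ms", "fps"]

    def __init__(self, path, every_n=30):
        if every_n < 1:
            raise ValueError("every_n must be >= 1")
        self.path = path
        self.every_n = every_n
        self._calls = 0

    def log(self, pred, conf, vision_ms, fps):
        """Return True if a row was written for this call."""
        self._calls += 1
        if self._calls % self.every_n != 0:
            return False
        new_file = not os.path.exists(self.path) or os.path.getsize(self.path) == 0
        with open(self.path, "a", newline="") as f:
            writer = csv.writer(f)
            if new_file:
                writer.writerow(self.FIELDS)  # header only for a fresh file
            ts = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
            writer.writerow([ts, pred, f"{conf:.3f}", f"{vision_ms:.1f}", f"{fps:.1f}"])
        return True
```

Opening the file per write keeps the log intact if the process is stopped with Ctrl+C, at the price of a little extra I/O that is negligible at one row every N frames.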

Troubleshooting

- CSI camera “No cameras available” or pipeline hangs

- Ensure the Arducam IMX477 HQ CSI ribbon is properly seated and the correct CSI port is used.

- Restart Argus service on host:

sudo systemctl restart nvargus-daemon

- Some Arducam IMX477 variants require vendor driver/DTB for specific JetPack releases. Consult Arducam’s Jetson IMX477 guide matching your L4T; install their deb if needed and reboot.

- Try sensor-id=1 if your carrier maps the port differently.

- I²C device not found (no 0x49 on i2cdetect)

- Recheck wiring: use 3.3 V (pin 1), not 5 V; correct SDA/SCL pins (3/5).

- Cable length and pull‑ups: many breakout boards have onboard pull‑ups; ensure total pull‑up is within spec and wiring is short.

- Confirm /dev/i2c-1 inside the container: if not present, add “--device /dev/i2c-1” or run with “--privileged”.

- PyTorch CUDA issues

- If torch.cuda.is_available() is False inside container:

- Ensure container was launched with “--runtime nvidia”.

- Match container tag to host L4T release (e.g., r35.4.1).

- Check dmesg for GPU errors; reboot if necessary.

- Low FPS or high latency

- Reduce input resolution or framerate (e.g., 960×540@30 or 1280×720@15).

- Switch to a faster backbone (e.g., MobileNetV2) by changing models in build_feature_extractor.

- Ensure jetson_clocks is active and power is in MAXN (nvpmodel -m 0).

- Avoid displaying windows; use headless mode to skip GUI costs.

- Thermal throttling

- If tegrastats shows clock drops or high temp, improve cooling (active fan), reduce FPS, or revert from MAXN during long runs.

- Spectral readings noisy/unstable

- Use a consistent illumination source; avoid flickering lights (50/60 Hz) and sunlight variation.

- Add a matte shroud around the sensor and fruit area to reduce stray light.

- Increase integration time (e.g., --integration-ms 150 to 200) if the reflectance is low; consider gain adjustments.
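For noisy spectral readings, one further option is to take several reads per fruit and combine them per band. The sketch below uses a per-band median, which tolerates an occasional outlier; it is an illustrative addition rather than part of the script, and each extra read costs one more integration period.

```python
import statistics


def robust_average(reads):
    """Per-band median across repeated reads (a list of equal-length lists)."""
    if not reads:
        raise ValueError("need at least one read")
    n_bands = len(reads[0])
    if any(len(r) != n_bands for r in reads):
        raise ValueError("all reads must have the same number of bands")
    return [statistics.median(r[b] for r in reads) for b in range(n_bands)]


def relative_spread(reads):
    """Per-band (max - min) / median as a stability indicator; 0.0 if the median is 0."""
    med = robust_average(reads)
    out = []
    for b, m in enumerate(med):
        vals = [r[b] for r in reads]
        out.append((max(vals) - min(vals)) / m if m else 0.0)
    return out


# Three reads of a 3-band toy spectrum; the first band of the last read is an outlier.
reads = [[10.0, 20.0, 30.0], [11.0, 19.0, 30.0], [50.0, 21.0, 30.0]]
print(robust_average(reads))  # [11.0, 20.0, 30.0]
```

With the real sensor, the input would be something like `[spec.read_calibrated().tolist() for _ in range(5)]`, and a large `relative_spread` on many bands points to flicker or stray light rather than sensor noise.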

Improvements

- Vision model optimization

- Export a fused or vision‑only model to ONNX and build a TensorRT engine (FP16 or INT8) to cut latency further and offload to DLA core on Xavier NX. For example: ResNet18 ONNX → trtexec --fp16 → engine; then call from Python for feature extraction.

- Better fusion models

- Replace Logistic Regression with a small shallow MLP trained on fused features; export to ONNX for hardware acceleration.

- Add domain‑specific features (e.g., hue histogram, NIR/UV spectral ratios) to improve separability with few samples.

- Data collection rigor

- Capture white‑reference and dark‑reference spectra for reflectance normalization; store both and apply R = (S – Dark) / (White – Dark).

- Calibrate per fruit type; one model per cultivar often improves accuracy.

- Robust ROI detection

- Use simple color segmentation or a lightweight detector (e.g., YOLO‑Nano) to isolate the fruit region before feature extraction; then compute CNN features on the cropped ROI.

- Deployment pipeline

- Stream results over MQTT or log to a database; add a simple web dashboard.

- Package into a systemd service; add watchdog and health probes.
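The reflectance normalization listed under data collection rigor, R = (S – Dark) / (White – Dark), can be sketched per band as follows. Returning 0.0 for a band whose white and dark references coincide is an assumption made here to avoid division by zero; the numbers are toy values.

```python
def reflectance(sample, dark, white, eps=1e-9):
    """Per-band R = (S - Dark) / (White - Dark)."""
    if not (len(sample) == len(dark) == len(white)):
        raise ValueError("sample, dark and white must have the same length")
    out = []
    for s, d, w in zip(sample, dark, white):
        span = w - d
        # A band where white and dark coincide carries no signal; report 0.0 (assumption).
        out.append((s - d) / span if abs(span) > eps else 0.0)
    return out


dark = [2.0, 2.0, 4.0]       # sensor covered
white = [102.0, 52.0, 4.0]   # white reference target under the same illumination
sample = [52.0, 27.0, 9.0]   # fruit reading
print(reflectance(sample, dark, white))  # [0.5, 0.5, 0.0]
```

Applied to the 18 calibrated bands before fusion, this removes most of the dependence on lamp intensity, provided the references are re-captured whenever the lighting or integration settings change.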

Final Checklist

- Hardware

- NVIDIA Jetson Xavier NX Developer Kit powered and cooled.

- Arducam IMX477 HQ CSI seated correctly; nvarguscamerasrc pipeline runs at desired resolution.

- ams AS7265x wired to 3.3 V, GND, SDA (pin 3), SCL (pin 5); 0x49 visible in i2cdetect.

- Software

- JetPack verified via /etc/nv_tegra_release; L4T ML container tag matches host L4T.

- Inside container: python3-opencv, qwiic AS7265x library, scikit‑learn installed.

- PyTorch reports CUDA available; device name is visible.

- Performance and power

- MAXN enabled (nvpmodel -m 0) and jetson_clocks set during tests; tegrastats shows stable GPU and EMC usage.

- Live inference achieves ≥ 15 FPS; vision_ms ≤ 15 ms; total printout includes pred label and confidence.

- Data and model

- At least 50 samples per class collected under consistent lighting.

- calibrator.pkl trained; eval accuracy ≥ 90% on held‑out.

- Logs/CSVs contain timestamps, labels, and 530‑dim feature vectors.

- Cleanup

- Revert power mode after benchmarking (nvpmodel -m 2).

- Commit your /opt/fruit project and dataset to version control or archive with notes on lighting/integration settings.
