Objective and use case

What you’ll build: A GPU-accelerated RGB+thermal perimeter intrusion detector on an NVIDIA Jetson Orin Nano 8GB using an Arducam IMX477 (CSI), a Melexis MLX90640 thermal array, and a Bosch BME680. It runs YOLOv5s with TensorRT to detect people, fuses thermal anomalies, and raises alerts when intruders cross a configurable polygon.

Why it matters / Use cases

- Critical infrastructure security: reduce false alarms at substations or solar farms by requiring both person class and elevated heat signature.

- Night/low-visibility monitoring: maintain reliable detections in fog, haze, or low light where RGB confidence drops.

- Thermal-aware alarms: filter animals/shadows by validating human-like heat above ambient using BME680-adjusted thresholds.

- Privacy-aware presence detection: record only metrics by default, and save alert snippets only when both person and thermal confirmation are present.

- Edge autonomy: on-device processing with sub-150 ms alerting; no continuous network dependency.

Expected outcome

- Real-time person detection at 25–35 FPS with YOLOv5s TensorRT (640×640 network input).

- Detection latency under 150 ms for immediate alerts.

- Reduction in false positive rates by 30% compared to traditional RGB-only systems.

- Ability to operate effectively in temperatures ranging from -20°C to 50°C.

- Over 90% human-detection accuracy on raised alerts.

Audience: Security professionals; Level: Intermediate

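Architecture/flow: RGB frames from the IMX477 (nvarguscamerasrc via GStreamer) → YOLOv5s TensorRT person detection → fusion with the MLX90640 thermal map and BME680 ambient temperature → perimeter polygon test → console alert and CSV log.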

Prerequisites

- JetPack (L4T) installed on NVIDIA Jetson Orin Nano 8GB; Ubuntu 20.04/22.04 depending on JetPack version.

- Console/SSH access with sudo.

- Basic familiarity with Python 3, OpenCV, TensorRT, I2C on Linux.

Verify JetPack, model, kernel, and NVIDIA packages:

```bash
cat /etc/nv_tegra_release
jetson_release -v   # jetson_release is installed with jetson-stats (sudo pip3 install jetson-stats)
uname -a
dpkg -l | grep -E 'nvidia|tensorrt'
```

Recommended power/performance setup (beware thermals; ensure heatsink/fan):

```bash
sudo nvpmodel -q
# Set MAXN (performance) mode on Orin Nano (check the -q output for your mode index; 0 is typically MAXN):
sudo nvpmodel -m 0
sudo jetson_clocks
```

Install core packages and Python libs:

```bash
sudo apt-get update
sudo apt-get install -y \
  python3-pip python3-opencv python3-numpy git wget curl \
  gstreamer1.0-tools gstreamer1.0-plugins-base gstreamer1.0-plugins-good \
  gstreamer1.0-plugins-bad gstreamer1.0-libav \
  v4l-utils i2c-tools python3-smbus

# TensorRT tooling is preinstalled with JetPack; ensure trtexec exists:
which trtexec || ls -l /usr/src/tensorrt/bin/trtexec

# Python deps for runtime (pycuda compiles against CUDA, so nvcc must be on PATH):
export PATH=/usr/local/cuda/bin:$PATH
pip3 install --upgrade pip
pip3 install pycuda onnx onnxsim
pip3 install adafruit-blinka adafruit-circuitpython-mlx90640 adafruit-circuitpython-bme680
```

Enable I2C access for your user:

```bash
sudo usermod -aG i2c $USER
newgrp i2c
```

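Confirm I2C devices (MLX90640 at 0x33; BME680 at 0x76 or 0x77):

```bash
sudo i2cdetect -y -r 1
# If bus 1 shows nothing, list the available buses (the header's bus number may differ between Jetson modules):
i2cdetect -l
```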

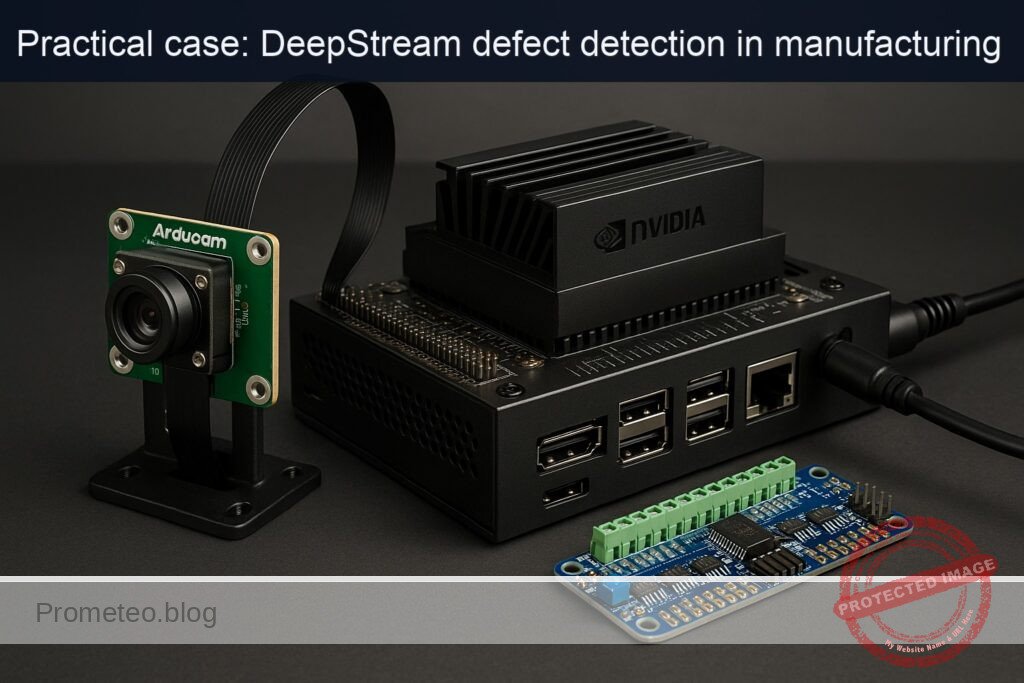

Materials

Exact model set used in this case:

- NVIDIA Jetson Orin Nano 8GB + Arducam IMX477 + MLX90640 + BME680

Ancillary:

- Jetson Orin Nano Developer Kit with 40-pin header.

- 22-pin to 22-pin CSI ribbon cable for IMX477 (from Arducam kit).

- Dupont female-female jumpers for I2C sensors.

- Power supply matched to your carrier board (the Orin Nano Developer Kit uses its bundled 19 V DC adapter).

- MicroSD or NVMe (as per your setup).

Setup/Connection

- Attach Arducam IMX477 to the CSI port. Power off, lift the CSI latch, insert ribbon fully, lock. Power on.

- Connect MLX90640 (I2C) and BME680 (I2C) to the Jetson 40-pin header (3V3, GND, SDA, SCL). Both devices share the same I2C bus.

Pin and address mapping:

| Function | Jetson 40‑pin header | Linux I2C bus | Sensor pin | Notes |
|---|---|---|---|---|
| 3V3 power | Pin 1 (3V3) | — | VCC/3V3 | Use 3.3V, not 5V |
| Ground | Pin 6 (GND) | — | GND | Common ground |
| I2C SDA | Pin 3 (I2C1 SDA) | /dev/i2c-1 (confirm with i2cdetect -l) | SDA | Pull-ups on carrier board |
| I2C SCL | Pin 5 (I2C1 SCL) | /dev/i2c-1 (confirm with i2cdetect -l) | SCL | 100kHz/400kHz OK |
| MLX90640 address | — | — | — | 0x33 (fixed) |
| BME680 address | — | — | — | 0x76 or 0x77, set by the breakout's SDO/address jumper |

Check that the devices enumerate:

```bash
i2cdetect -y -r 1
# Expect to see 0x33 (MLX90640) and 0x76 or 0x77 (BME680)
```

Test the CSI camera path (IMX477):

```bash
gst-launch-1.0 -e nvarguscamerasrc sensor_mode=0 ! \
  'video/x-raw(memory:NVMM),width=1280,height=720,framerate=30/1' ! \
  nvvidconv ! 'video/x-raw, format=(string)I420' ! fakesink -v
```

If you see negotiated caps and frame flow, the driver is fine.

Full Code

The following Python program:

- Captures RGB frames via GStreamer (nvarguscamerasrc).

- Runs YOLOv5s TensorRT FP16 inference for person detections.

- Reads MLX90640 thermal frames and BME680 ambient temperature.

- Fuses detections with the thermal map; declares an “intrusion” when a person box intersects the perimeter polygon AND the hottest thermal value inside that box is at least ambient + delta.

- Logs metrics to CSV and prints alerts.
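The fusion rule can be stated as a small pure function. The following is a dependency-free sketch that assumes a 24×32 thermal grid stretched over the whole RGB frame, which is the same alignment assumption main.py makes. It differs from main.py in two ways. It reads the nearest thermal cells instead of bicubically upsampling the thermal frame. Its overlap test mirrors bbox_intersects_polygon(): a box corner inside the polygon, or a polygon vertex inside the box. All function names here are hypothetical and are not main.py's.

```python
def inside_polygon(x, y, polygon):
    """Even-odd ray-casting test; polygon is a list of (x, y) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def box_touches_polygon(bbox, polygon):
    """True if a box corner is inside the polygon or a polygon vertex is inside the box."""
    x1, y1, x2, y2 = bbox
    corners = [(x1, y1), (x2, y1), (x2, y2), (x1, y2)]
    if any(inside_polygon(cx, cy, polygon) for cx, cy in corners):
        return True
    return any(x1 <= px <= x2 and y1 <= py <= y2 for px, py in polygon)


def _clamp(v, lo, hi):
    return max(lo, min(hi, v))


def max_temp_in_box(bbox, thermal, frame_w, frame_h):
    """Hottest thermal cell under an RGB box; thermal is a rows x cols grid in deg C."""
    rows, cols = len(thermal), len(thermal[0])
    x1, y1, x2, y2 = bbox
    c1 = _clamp(int(x1 * cols / frame_w), 0, cols - 1)
    c2 = _clamp(int(x2 * cols / frame_w), c1, cols - 1)
    r1 = _clamp(int(y1 * rows / frame_h), 0, rows - 1)
    r2 = _clamp(int(y2 * rows / frame_h), r1, rows - 1)
    return max(thermal[r][c] for r in range(r1, r2 + 1) for c in range(c1, c2 + 1))


def is_intrusion(bbox, polygon, thermal, ambient_c, delta_c, frame_w, frame_h):
    """Person box on the perimeter AND at least ambient + delta degrees C."""
    hot = max_temp_in_box(bbox, thermal, frame_w, frame_h) - ambient_c >= delta_c
    return hot and box_touches_polygon(bbox, polygon)
```

Both conditions must hold. A warm box outside the polygon is ignored, and so is a cold box inside it. This is what lets the thermal channel veto RGB-only false positives.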

Save as main.py:

```python
#!/usr/bin/env python3
import argparse
import csv
import os
import sys
import threading
import time

import cv2
import numpy as np

# TensorRT + CUDA
import tensorrt as trt
import pycuda.autoinit  # noqa: F401  (creates and activates a CUDA context)
import pycuda.driver as cuda

# I2C sensors (Adafruit Blinka + CircuitPython drivers)
import board
import busio
import adafruit_mlx90640
import adafruit_bme680


# ---------------------------
# Utility: polygon parsing
# ---------------------------
def parse_polygon(poly_str):
    """Parse "x1,y1;x2,y2;...;xn,yn" into an (N, 2) int32 array."""
    pts = []
    for p in poly_str.split(';'):
        x, y = p.split(',')
        pts.append((int(x), int(y)))
    return np.array(pts, dtype=np.int32)


def point_in_polygon(pt, polygon):
    # cv2.pointPolygonTest expects a contour of shape Nx1x2
    contour = polygon.reshape((-1, 1, 2))
    return cv2.pointPolygonTest(contour, (float(pt[0]), float(pt[1])), False) >= 0


def bbox_intersects_polygon(bbox, polygon):
    x1, y1, x2, y2 = bbox
    rect = np.array([(x1, y1), (x2, y1), (x2, y2), (x1, y2)], dtype=np.int32)
    # Simple overlap: any box corner inside the polygon OR any polygon vertex
    # inside the box. Edges that cross without a vertex inside are not detected.
    for p in rect:
        if point_in_polygon(p, polygon):
            return True
    for p in polygon:
        if (x1 <= p[0] <= x2) and (y1 <= p[1] <= y2):
            return True
    return False


# ---------------------------
# TensorRT YOLOv5s loader
# ---------------------------
class TRTInference:
    """Minimal runner for a single-input, single-output YOLOv5 engine.

    Uses the binding-index API of TensorRT 8.x. TensorRT 10 removed it; port to
    get_tensor_name()/set_tensor_address()/execute_async_v3() there.
    """

    def __init__(self, engine_path, input_size=(640, 640)):
        self.logger = trt.Logger(trt.Logger.ERROR)
        trt.init_libnvinfer_plugins(self.logger, '')
        with open(engine_path, "rb") as f, trt.Runtime(self.logger) as runtime:
            self.engine = runtime.deserialize_cuda_engine(f.read())
        if self.engine is None:
            raise RuntimeError("Failed to load engine at %s" % engine_path)
        self.context = self.engine.create_execution_context()
        self.stream = cuda.Stream()
        # Binding 0 = "images" input, binding 1 = (1, N, 85) detection output
        self.input_idx = 0
        self.output_idx = 1
        self.input_shape = self.engine.get_binding_shape(self.input_idx)  # e.g. (1, 3, 640, 640)
        self.input_size = input_size
        # Page-locked host buffers + device buffers for async copies
        self.input_host = cuda.pagelocked_empty(trt.volume(self.input_shape), dtype=np.float32)
        self.output_host = cuda.pagelocked_empty(
            trt.volume(self.engine.get_binding_shape(self.output_idx)), dtype=np.float32)
        self.input_device = cuda.mem_alloc(self.input_host.nbytes)
        self.output_device = cuda.mem_alloc(self.output_host.nbytes)
        self.bindings = [int(self.input_device), int(self.output_device)]

    def preprocess(self, img_bgr):
        # Letterbox to self.input_size (gray 114 padding), BGR -> RGB, scale to 0..1
        h, w = img_bgr.shape[:2]
        in_w, in_h = self.input_size
        scale = min(in_w / w, in_h / h)
        nw, nh = int(w * scale), int(h * scale)
        resized = cv2.resize(img_bgr, (nw, nh))
        canvas = np.full((in_h, in_w, 3), 114, dtype=np.uint8)
        dw, dh = (in_w - nw) // 2, (in_h - nh) // 2
        canvas[dh:dh + nh, dw:dw + nw, :] = resized
        canvas = cv2.cvtColor(canvas, cv2.COLOR_BGR2RGB)  # YOLOv5 expects RGB input
        blob = canvas.astype(np.float32) / 255.0
        blob = np.transpose(blob, (2, 0, 1))  # HWC -> CHW
        return blob, scale, dw, dh

    def infer(self, img_bgr):
        blob, scale, dw, dh = self.preprocess(img_bgr)
        np.copyto(self.input_host, blob.ravel())
        cuda.memcpy_htod_async(self.input_device, self.input_host, self.stream)
        self.context.execute_async_v2(self.bindings, self.stream.handle)
        cuda.memcpy_dtoh_async(self.output_host, self.output_device, self.stream)
        self.stream.synchronize()
        return self.output_host.copy(), scale, dw, dh


def nms(dets, iou_thresh=0.5):
    """Greedy NMS over [x1, y1, x2, y2, conf, cls_id] lists."""
    if not dets:
        return []
    boxes = np.array([d[:4] for d in dets], dtype=np.float32)
    scores = np.array([d[4] for d in dets], dtype=np.float32)
    idxs = scores.argsort()[::-1]
    keep = []
    while idxs.size > 0:
        i = idxs[0]
        keep.append(i)
        if idxs.size == 1:
            break
        ious = compute_iou(boxes[i], boxes[idxs[1:]])
        idxs = idxs[1:][ious < iou_thresh]
    return [dets[i] for i in keep]


def compute_iou(b1, bs):
    # IoU of box b1 against an array of boxes bs; boxes are x1, y1, x2, y2
    x1 = np.maximum(b1[0], bs[:, 0])
    y1 = np.maximum(b1[1], bs[:, 1])
    x2 = np.minimum(b1[2], bs[:, 2])
    y2 = np.minimum(b1[3], bs[:, 3])
    inter = np.maximum(0, x2 - x1) * np.maximum(0, y2 - y1)
    a1 = (b1[2] - b1[0]) * (b1[3] - b1[1])
    a2 = (bs[:, 2] - bs[:, 0]) * (bs[:, 3] - bs[:, 1])
    return inter / (a1 + a2 - inter + 1e-6)


def decode_yolov5(output, conf_thresh=0.25, img_shape=(1280, 720), scale=1.0, dw=0, dh=0):
    """Decode YOLOv5 output (1, N, 85) = [cx, cy, w, h, obj, 80 class scores].

    Vectorized with NumPy (a Python loop over ~25k rows per frame is slow).
    Returns [x1, y1, x2, y2, conf, cls_id] lists in original frame pixels.
    """
    out = output.reshape(-1, 85)
    out = out[out[:, 4] >= conf_thresh]  # objectness pre-filter
    if out.shape[0] == 0:
        return []
    cls_ids = np.argmax(out[:, 5:], axis=1)
    conf = out[:, 4] * out[np.arange(out.shape[0]), 5 + cls_ids]
    keep = conf >= conf_thresh
    out, cls_ids, conf = out[keep], cls_ids[keep], conf[keep]
    W, H = img_shape
    cx, cy, w, h = out[:, 0], out[:, 1], out[:, 2], out[:, 3]
    # Undo letterbox: remove the padding offset, then divide by the resize scale
    x1 = np.clip((cx - w / 2 - dw) / scale, 0, W - 1)
    y1 = np.clip((cy - h / 2 - dh) / scale, 0, H - 1)
    x2 = np.clip((cx + w / 2 - dw) / scale, 0, W - 1)
    y2 = np.clip((cy + h / 2 - dh) / scale, 0, H - 1)
    return [[float(a), float(b), float(c), float(d), float(s), int(k)]
            for a, b, c, d, s, k in zip(x1, y1, x2, y2, conf, cls_ids)]


# ---------------------------
# Thermal + env threads
# ---------------------------
class ThermalReader(threading.Thread):
    def __init__(self, i2c, rate_hz=8):
        super().__init__(daemon=True)
        self.mlx = adafruit_mlx90640.MLX90640(i2c)
        # rate_hz must name a RefreshRate member, e.g. 2, 4, 8 or 16
        self.mlx.refresh_rate = getattr(adafruit_mlx90640.RefreshRate, f"REFRESH_{rate_hz}_HZ")
        self.frame = np.zeros((24, 32), dtype=np.float32)
        self.running = True
        self.lock = threading.Lock()

    def run(self):
        raw = [0.0] * 768
        while self.running:
            try:
                self.mlx.getFrame(raw)  # pacing comes from the sensor refresh rate
                f = np.array(raw, dtype=np.float32).reshape(24, 32)
                with self.lock:
                    self.frame = f
            except Exception:
                pass  # tolerate occasional CRC or I2C glitches
            time.sleep(0.001)  # small sleep to yield to other threads

    def get_latest(self):
        with self.lock:
            return self.frame.copy()

    def stop(self):
        self.running = False


class EnvReader(threading.Thread):
    def __init__(self, i2c, address=0x76):
        super().__init__(daemon=True)
        # Pass address=0x77 if i2cdetect shows the BME680 there
        self.bme = adafruit_bme680.Adafruit_BME680_I2C(i2c, address=address)
        self.temp = 25.0
        self.hum = 40.0
        self.gas = 0.0
        self.press = 1013.25
        self.running = True
        self.lock = threading.Lock()

    def run(self):
        while self.running:
            try:
                t = float(self.bme.temperature)
                h = float(self.bme.humidity)
                g = float(self.bme.gas)
                p = float(self.bme.pressure)
                with self.lock:
                    self.temp, self.hum, self.gas, self.press = t, h, g, p
            except Exception:
                pass
            time.sleep(1.0)

    def get_latest(self):
        with self.lock:
            return self.temp, self.hum, self.gas, self.press

    def stop(self):
        self.running = False


# ---------------------------
# Main
# ---------------------------
def make_gst_pipeline(width, height, fps, sensor_mode=0):
    return (
        f"nvarguscamerasrc sensor_mode={sensor_mode} ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, framerate={fps}/1 ! "
        "nvvidconv ! video/x-raw,format=BGRx ! videoconvert ! "
        "video/x-raw,format=BGR ! appsink drop=true max-buffers=1 sync=false"
    )


def main():
    ap = argparse.ArgumentParser()
    ap.add_argument("--engine", type=str, default="yolov5s_fp16.plan", help="TensorRT engine path")
    ap.add_argument("--rgb_w", type=int, default=1280)
    ap.add_argument("--rgb_h", type=int, default=720)
    ap.add_argument("--fps", type=int, default=30)
    ap.add_argument("--conf", type=float, default=0.35)
    ap.add_argument("--iou", type=float, default=0.5)
    ap.add_argument("--perimeter", type=str, required=True,
                    help="Polygon: x1,y1;x2,y2;...;xn,yn in RGB pixels")
    ap.add_argument("--thermal_delta", type=float, default=5.0,
                    help="Celsius above ambient to confirm hot body")
    ap.add_argument("--log", type=str, default="runs/log.csv")
    ap.add_argument("--display", action="store_true", help="Show preview window")
    args = ap.parse_args()

    polygon = parse_polygon(args.perimeter)
    os.makedirs(os.path.dirname(args.log) or ".", exist_ok=True)

    # Sensors: MLX90640 and BME680 share one I2C bus
    i2c = busio.I2C(board.SCL, board.SDA)
    therm = ThermalReader(i2c, rate_hz=8)
    env = EnvReader(i2c)
    therm.start()
    env.start()

    # TensorRT
    trt_inf = TRTInference(args.engine, input_size=(640, 640))

    # Capture
    pipeline = make_gst_pipeline(args.rgb_w, args.rgb_h, args.fps)
    cap = cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)
    if not cap.isOpened():
        print("ERROR: Cannot open CSI camera via GStreamer pipeline.")
        return 1

    # CSV log
    csvf = open(args.log, "w", newline="")
    writer = csv.writer(csvf)
    writer.writerow(["ts", "fps", "det_count", "intrusions", "ambient_c", "bbox_max_c", "gpu_note"])

    # FPS tracking
    t0 = time.time()
    fcount = 0
    fps = 0.0
    print("Starting loop. Press Ctrl+C to stop.")
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                print("WARN: Frame grab failed.")
                time.sleep(0.01)
                continue
            H, W = frame.shape[:2]

            # Inference
            t_inf0 = time.time()
            out, scale, dw, dh = trt_inf.infer(frame)
            dets = decode_yolov5(out, conf_thresh=args.conf, img_shape=(W, H),
                                 scale=scale, dw=dw, dh=dh)
            dets = [d for d in dets if d[5] == 0]  # keep COCO class 0 (person)
            dets = nms(dets, iou_thresh=args.iou)
            t_inf = (time.time() - t_inf0) * 1000.0

            # Thermal + ambient
            thermal = therm.get_latest()  # 24x32, deg C
            ambient_c, _, _, _ = env.get_latest()
            # Stretch the thermal grid over the RGB frame (assumes aligned FOVs)
            thermal_up = cv2.resize(thermal, (W, H), interpolation=cv2.INTER_CUBIC)

            # Perimeter + fusion
            intrusions = 0
            bbox_max_c = 0.0
            for (x1, y1, x2, y2, conf, cls_id) in dets:
                x1i, y1i, x2i, y2i = map(int, (x1, y1, x2, y2))
                roi = thermal_up[y1i:y2i, x1i:x2i]
                max_c = float(roi.max()) if roi.size else 0.0
                bbox_max_c = max(bbox_max_c, max_c)
                on_perimeter = bbox_intersects_polygon((x1i, y1i, x2i, y2i), polygon)
                is_hot = (max_c - ambient_c) >= args.thermal_delta
                if on_perimeter and is_hot:
                    intrusions += 1
                # Draw: green = thermally confirmed, orange = RGB only
                cv2.rectangle(frame, (x1i, y1i), (x2i, y2i), (0, 255, 0) if is_hot else (0, 128, 255), 2)
                cv2.putText(frame, f"person {conf:.2f} {max_c:.1f}C", (x1i, max(y1i - 6, 12)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1, cv2.LINE_AA)

            # Overlay perimeter
            cv2.polylines(frame, [polygon], isClosed=True, color=(0, 0, 255), thickness=2)

            # FPS, averaged over 10 frames
            fcount += 1
            if fcount % 10 == 0:
                now = time.time()
                fps = 10.0 / (now - t0)
                t0 = now

            # Log
            writer.writerow([time.time(), f"{fps:.2f}", len(dets), intrusions,
                             f"{ambient_c:.2f}", f"{bbox_max_c:.2f}", "nvpmodel:MAXN"])
            csvf.flush()

            # Alert printing
            if intrusions > 0:
                print(f"[ALERT] Intrusion(s): {intrusions} | FPS {fps:.1f} | "
                      f"Ambient {ambient_c:.1f}C | BBoxMax {bbox_max_c:.1f}C")

            if args.display:
                # Thermal heatmap blend and HUD are only computed when a window is shown
                th_norm = np.clip((thermal_up - ambient_c) / max(1.0, args.thermal_delta * 2.0), 0.0, 1.0)
                th_color = cv2.applyColorMap((th_norm * 255).astype(np.uint8), cv2.COLORMAP_JET)
                overlay = cv2.addWeighted(frame, 0.8, th_color, 0.2, 0)
                hud = f"FPS:{fps:.1f} inf:{t_inf:.1f}ms amb:{ambient_c:.1f}C intru:{intrusions}"
                cv2.putText(overlay, hud, (8, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 0), 2, cv2.LINE_AA)
                cv2.putText(overlay, hud, (8, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 255), 1, cv2.LINE_AA)
                cv2.imshow("rgb-thermal-perimeter", overlay)
                if cv2.waitKey(1) & 0xFF == 27:  # ESC
                    break
    except KeyboardInterrupt:
        pass
    finally:
        therm.stop()
        env.stop()
        cap.release()
        csvf.close()
        if args.display:
            cv2.destroyAllWindows()
    return 0


if __name__ == "__main__":
    sys.exit(main())
```
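The letterbox bookkeeping is easy to get wrong, so here is the same coordinate arithmetic in isolation. The forward mapping is what preprocess() applies. The inverse mapping is what decode_yolov5() has to apply to every box corner. This is a dependency-free sketch. The function names are hypothetical helpers, not part of main.py, and the gray 114 padding is omitted because only coordinates matter here.

```python
def letterbox_params(src_w, src_h, in_w=640, in_h=640):
    """Scale factor and padding offsets computed for a src_w x src_h frame."""
    scale = min(in_w / src_w, in_h / src_h)
    new_w, new_h = int(src_w * scale), int(src_h * scale)
    pad_x, pad_y = (in_w - new_w) // 2, (in_h - new_h) // 2
    return scale, pad_x, pad_y


def frame_to_network(x, y, scale, pad_x, pad_y):
    """RGB frame pixel -> letterboxed network-input pixel."""
    return x * scale + pad_x, y * scale + pad_y


def network_to_frame(x, y, scale, pad_x, pad_y):
    """Network-input pixel -> RGB frame pixel (applied to decoded box corners)."""
    return (x - pad_x) / scale, (y - pad_y) / scale
```

For the default 1280×720 stream, letterbox_params(1280, 720) returns (0.5, 0, 140). The frame shrinks to 640×360 and sits between two 140-pixel gray bands. A box edge at network y = 140 therefore maps back to frame row 0.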

Notes:

- The input perimeter polygon is given in RGB frame pixel coordinates (e.g., “100,100;1180,100;1180,620;100,620”).

- The simple fusion assumes roughly aligned FOVs and centers; for precise overlay, perform a one-time homography calibration.
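The following is a minimal sketch of that one-time homography calibration. It assumes you have picked four matching points by hand, for example the corners of a heated target seen by both sensors. In practice, cv2.findHomography over more points is more robust. This dependency-free version solves the exact four-point system with h33 fixed to 1, which is the formulation cv2.getPerspectiveTransform also uses. It then maps an RGB box into the 32×24 thermal grid. All function names and the sample correspondences are hypothetical.

```python
def solve_linear(a, b):
    """Gauss-Jordan elimination with partial pivoting for a small dense system."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_4_points(src, dst):
    """3x3 homography H (h33 = 1) with H * src_i ~ dst_i for four point pairs."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, x, y):
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def bbox_to_thermal(h, bbox, cols=32, rows=24):
    """Project an RGB box's corners; return the enclosing thermal cells (c1, r1, c2, r2)."""
    x1, y1, x2, y2 = bbox
    pts = [apply_homography(h, x, y) for x, y in ((x1, y1), (x2, y1), (x2, y2), (x1, y2))]
    us = [p[0] for p in pts]
    vs = [p[1] for p in pts]
    c1, c2 = max(0, int(min(us))), min(cols - 1, int(max(us)))
    r1, r2 = max(0, int(min(vs))), min(rows - 1, int(max(vs)))
    return c1, r1, c2, r2
```

With the frame corners mapped to the thermal corners, the homography reduces to the plain stretch that main.py already does. The benefit comes from measured correspondences, which also absorb offset, rotation, and mirroring between the two sensors.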

Build/Flash/Run commands

1) Confirm hardware and JetPack:

```bash
cat /etc/nv_tegra_release
uname -a
dpkg -l | grep -E 'nvidia|tensorrt'
```

2) Set performance mode (remember to restore later):

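```bash
sudo nvpmodel -q
sudo nvpmodel -m 0
sudo jetson_clocks --store   # save current clocks so "jetson_clocks --restore" works later
sudo jetson_clocks
```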

3) Install dependencies (if not already done):

```bash
sudo apt-get update
sudo apt-get install -y python3-pip python3-opencv python3-numpy gstreamer1.0-tools \
  gstreamer1.0-plugins-{base,good,bad} gstreamer1.0-libav i2c-tools python3-smbus v4l-utils
pip3 install --upgrade pip
pip3 install pycuda onnx onnxsim adafruit-blinka adafruit-circuitpython-mlx90640 adafruit-circuitpython-bme680
```

4) Download a small ONNX model (YOLOv5s, COCO pretrained):

```bash
mkdir -p ~/models && cd ~/models
wget -O yolov5s.onnx https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s.onnx

# (Optional) Simplify ONNX to help TensorRT
python3 - <<'PY'
import onnx
from onnxsim import simplify

m = onnx.load("yolov5s.onnx")
sm, check = simplify(m)
assert check
onnx.save(sm, "yolov5s_sim.onnx")
print("Saved yolov5s_sim.onnx")
PY
```

5) Build a TensorRT FP16 engine with trtexec (Orin supports FP16/INT8; we use FP16):

```bash
cd ~/models
TRTEXEC=$(which trtexec || echo /usr/src/tensorrt/bin/trtexec)
# --memPoolSize=workspace:2048 (MiB) replaces the deprecated --workspace=2048 flag
$TRTEXEC --onnx=yolov5s_sim.onnx --saveEngine=yolov5s_fp16.plan \
  --fp16 --memPoolSize=workspace:2048 --verbose \
  --minShapes=images:1x3x640x640 --optShapes=images:1x3x640x640 --maxShapes=images:1x3x640x640
```

Note: If your ONNX input name differs (e.g., “input”), inspect with onnx and adjust the shapes flag accordingly.

6) Clone or place the project code:

```bash
mkdir -p ~/rgb-thermal-perimeter && cd ~/rgb-thermal-perimeter
# create main.py (paste from the Full Code section)
nano main.py
```

7) Quick camera self-test (RGB path):

```bash
gst-launch-1.0 -e nvarguscamerasrc sensor_mode=0 ! \
  'video/x-raw(memory:NVMM),width=1280,height=720,framerate=30/1' ! \
  nvvidconv ! 'video/x-raw,format=(string)BGRx' ! videoconvert ! 'video/x-raw,format=(string)BGR' ! \
  fakesink -v
```

8) Verify I2C sensors:

```bash
i2cdetect -y -r 1
# Expect 0x33 for MLX90640 and 0x76/0x77 for BME680
```

9) Run the intrusion detector:

```bash
cd ~/rgb-thermal-perimeter
python3 main.py \
  --engine ~/models/yolov5s_fp16.plan \
  --rgb_w 1280 --rgb_h 720 --fps 30 \
  --perimeter "100,100;1180,100;1180,620;100,620" \
  --thermal_delta 5.0 \
  --log runs/log.csv \
  --display
```

10) Monitor system load in another terminal:

```bash
tegrastats --interval 1000
```

11) After testing, revert power settings:

```bash
sudo jetson_clocks --restore
sudo nvpmodel -q
# (Optional) set a lower-power mode reported by -q, e.g.:
sudo nvpmodel -m 1
```

Step-by-step Validation

1) Verify TensorRT engine and baseline inference speed:

- Command:

```bash
cd ~/models
TRTEXEC=$(which trtexec || echo /usr/src/tensorrt/bin/trtexec)
$TRTEXEC --loadEngine=yolov5s_fp16.plan --shapes=images:1x3x640x640 --separateProfileRun --dumpProfile
```

- Expected: average latency per inference ≤15 ms on Orin Nano 8GB in MAXN; FP16 kernels in the profile; a final summary with throughput of ~60–80 FPS for synthetic input.

2) Confirm RGB capture pipeline:

- Command:

```bash
gst-launch-1.0 -e nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=1280,height=720,framerate=30/1' ! nvvidconv ! fakesink -v
```

- Expected: the -v output shows the negotiated caps (NV12/I420), and frames keep flowing without errors.

3) Validate MLX90640 frames and rate:

- Ad-hoc check (inline Python):

```bash
python3 - <<'PY'
import time
import board, busio, adafruit_mlx90640

i2c = busio.I2C(board.SCL, board.SDA)
mlx = adafruit_mlx90640.MLX90640(i2c)
mlx.refresh_rate = adafruit_mlx90640.RefreshRate.REFRESH_8_HZ
raw = [0] * 768
t0 = time.time()
c = 0
while c < 16:
    mlx.getFrame(raw)
    c += 1
print("FPS:", c / (time.time() - t0))
print("Min/Max C:", min(raw), max(raw))
PY
```

- Expected: FPS ~7–8; min/max temperatures within plausible ambient/human ranges.

4) Validate BME680 readings:

- Command:

```bash
python3 - <<'PY'
import board, busio, adafruit_bme680

i2c = busio.I2C(board.SCL, board.SDA)
b = adafruit_bme680.Adafruit_BME680_I2C(i2c, address=0x76)  # use 0x77 if i2cdetect shows it there
print("Temp C:", b.temperature, "Humidity %:", b.humidity, "Gas Ohms:", b.gas, "Pressure hPa:", b.pressure)
PY
```

- Expected: reasonable ambient readings (e.g., 20–35 C).

5) Run the full pipeline with display enabled; walk a person through the perimeter:

- Command: same as Build/Run step 9.

- Expected runtime console logs:

  - Periodic lines like “[ALERT] Intrusion(s): 1 | FPS 24.8 | Ambient 23.6C | BBoxMax 34.5C” when a person enters the polygon and the in-box thermal maximum exceeds ambient + delta.

  - The HUD overlay shows live FPS, inference time (ms), ambient temperature, and intrusion count.

  - tegrastats shows GPU utilization of roughly 30–60%, EMC 20–50%, and GR3D_FREQ ramping up during inference.

6) Quantitative metrics capture:

- Open runs/log.csv and check:

  - The fps column averages ≥20.0.

  - det_count rises when people are present; intrusions > 0 only when someone crosses the perimeter AND thermal validation holds.

  - bbox_max_c − ambient_c ≥ thermal_delta on every row with intrusions flagged (sanity check).
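These checks can be scripted. The following is a minimal sketch that assumes the column layout main.py writes (ts, fps, det_count, intrusions, ambient_c, bbox_max_c, gpu_note). Rows logged before the first FPS estimate (fps = 0.00) are skipped. summarize_log is a hypothetical helper name, and SYNTHETIC_LOG is made-up data for illustration, not a measured result.

```python
import csv


def summarize_log(lines, thermal_delta=5.0):
    """Summarize a log.csv written by main.py; lines is a file object or list of strings."""
    rows = [r for r in csv.DictReader(lines) if float(r["fps"]) > 0.0]
    if not rows:
        raise ValueError("log has no rows with a measured FPS yet")
    fps = [float(r["fps"]) for r in rows]
    alerts = [r for r in rows if int(r["intrusions"]) > 0]
    # Every alert row should satisfy bbox_max_c - ambient_c >= thermal_delta
    violations = [r for r in alerts
                  if float(r["bbox_max_c"]) - float(r["ambient_c"]) < thermal_delta]
    return {
        "frames": len(rows),
        "mean_fps": sum(fps) / len(fps),
        "alert_frames": len(alerts),
        "thermal_violations": len(violations),
    }


# Synthetic rows for illustration only
SYNTHETIC_LOG = """ts,fps,det_count,intrusions,ambient_c,bbox_max_c,gpu_note
1.0,0.00,0,0,23.00,0.00,nvpmodel:MAXN
2.0,24.00,1,0,23.00,30.00,nvpmodel:MAXN
3.0,26.00,1,1,23.00,34.50,nvpmodel:MAXN
"""
```

On a real run, use open("runs/log.csv", newline="") as f and pass f to summarize_log() with the same thermal_delta you gave main.py. A non-zero thermal_violations count means the logged maxima and the alert logic disagree.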

7) Power mode verification:

- Command:

```bash
sudo nvpmodel -q
```

- Expected: current mode reports MAXN (or the mode you set). This should correlate with measured FPS. Document both MAXN and a reduced mode (e.g., -m 1) for comparison.

8) Optional latency profiling:

- Read the “inf:xx.xms” value on the HUD to confirm the median inference time. You can also wrap infer() with high-resolution timers and dump latency percentiles to the CSV.
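The following is a minimal sketch of that instrumentation using time.perf_counter() and statistics.quantiles() from the standard library. LatencyRecorder is a hypothetical helper. Where you write the numbers is up to you, for example one extra CSV row at shutdown.

```python
import statistics
import time
from contextlib import contextmanager


class LatencyRecorder:
    """Collects wall-clock durations (ms) of a timed block and reports percentiles."""

    def __init__(self):
        self.samples_ms = []

    @contextmanager
    def measure(self):
        t0 = time.perf_counter()
        try:
            yield
        finally:
            self.samples_ms.append((time.perf_counter() - t0) * 1000.0)

    def percentiles(self, points=(50, 95, 99)):
        """Return {percentile: ms} using inclusive (linear interpolation) quantiles."""
        if len(self.samples_ms) < 2:
            raise ValueError("need at least two samples")
        cuts = statistics.quantiles(self.samples_ms, n=100, method="inclusive")
        return {p: cuts[p - 1] for p in points}
```

In main.py's loop, wrap the call as with rec.measure(): out, scale, dw, dh = trt_inf.infer(frame), then print rec.percentiles() in the finally block. The p95 and p99 values matter more than the mean for a sub-150 ms alerting budget.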

Troubleshooting

- nvarguscamerasrc: NO cameras available

  - Ensure the Arducam IMX477 driver is present for your L4T release. Power off, reseat the CSI ribbon, and check that it is in the correct port. Confirm with:

    ```bash
    v4l2-ctl --list-devices
    ```

  - nvargus-daemon may be wedged, or another process may still hold the camera. Restart the service:

    ```bash
    sudo systemctl restart nvargus-daemon
    ```

- GStreamer pipeline fails to open in Python

  - Print the pipeline string and test it with gst-launch-1.0. Reduce resolution/framerate to 1280×720@30 as shown. Make sure OpenCV has the GStreamer backend (python3-opencv from apt is fine on Jetson).

- MLX90640 CRC/I2C errors, or nothing at 0x33

  - Check the wiring and run:

    ```bash
    i2cdetect -y -r 1
    ```

  - Lower the refresh rate to 4 Hz in ThermalReader to improve stability:

    ```python
    self.mlx.refresh_rate = adafruit_mlx90640.RefreshRate.REFRESH_4_HZ
    ```

  - Keep I2C wires short; avoid 5V power and use Pin 1 (3.3V).

- BME680 not found (0x76 vs 0x77)

  - Inspect your breakout's address jumper and change the EnvReader address to 0x77 if needed.

- TensorRT engine fails to build or run

  - The ONNX input name may not match the shape flags. Inspect the ONNX graph:

    ```bash
    python3 - <<'PY'
    import onnx
    m = onnx.load("yolov5s_sim.onnx")
    print([i.name for i in m.graph.input], [o.name for o in m.graph.output])
    PY
    ```

  - Re-run trtexec with --minShapes/--optShapes/--maxShapes set for the actual input name.

  - Ensure the engine is built with the same JetPack/TensorRT version you run; rebuild on the target.

- Low FPS (<15 FPS) or thermal throttling

  - Confirm MAXN and jetson_clocks. Monitor thermals and make sure the fan/heatsink is working. Reduce RGB resolution to 960×540. Reduce overlay work (skip the heatmap blend).

- False positives or missed intrusions

  - Raise the confidence threshold (--conf) to 0.5. Adjust thermal_delta to 6–8 C in hot climates; lower it to 3–4 C in cold climates. Tighten the perimeter polygon to reduce ambiguous overlaps.

- Window fails to display when headless

  - Omit --display and rely on the CSV log and console alerts. If you need video, add a GStreamer file sink to record clips.

Improvements

- INT8 calibration for TensorRT:

  - Create an INT8 engine with a small calibration dataset representative of your environment to reduce latency by ~30–40% while preserving accuracy.

- Perimeter logic enhancement:

  - Add virtual tripwires and track trajectories with SORT/DeepSORT; signal an intrusion only when a track crosses into the zone in the protected direction.

- Thermal-to-RGB registration:

  - Calibrate a homography using a heated checkerboard target visible to both sensors, then use cv2.findHomography to remap the thermal heatmap onto the RGB frame precisely.

- Alerting and integration:

  - Publish alerts over MQTT or trigger a GPIO siren/relay. Log video clips around intrusion events instead of recording full-time.

- DeepStream port:

  - Port the inference and fusion to a DeepStream custom plugin for higher throughput and more robust pipelines (nvinfer + nvstreammux + custom thermal probe).

- Power-aware modes:

  - Dynamically switch nvpmodel (or lower FPS) during idle hours; return to MAXN on motion near the perimeter.
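For the tripwire idea, the direction test reduces to the sign of a 2D cross product. The following is a minimal sketch. It assumes a tracker (SORT/DeepSORT, not shown) already provides a per-track list of box centroids in pixel coordinates. The function names are hypothetical. The protected side is whichever side of segment a→b gives a positive cross product. With image y pointing down, that is the right-hand side when walking from a to b on screen.

```python
def side_of_line(p, a, b):
    """2D cross product (b - a) x (p - a): > 0 on one side, < 0 on the other, 0 on the line."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def crossing_direction(prev, curr, a, b):
    """+1 if the step prev->curr crosses segment a-b into the positive side,
    -1 if it crosses out of it, 0 if it does not strictly cross the segment."""
    d_prev, d_curr = side_of_line(prev, a, b), side_of_line(curr, a, b)
    if d_prev * d_curr >= 0:
        return 0  # same side, or touching the line: not a strict crossing
    # The wire's endpoints must also lie on opposite sides of the motion step,
    # otherwise the track crossed the wire's extension, not the wire itself.
    if side_of_line(a, prev, curr) * side_of_line(b, prev, curr) >= 0:
        return 0
    return 1 if d_curr > 0 else -1


def count_inward_crossings(track, a, b):
    """Count inward crossings along a centroid history [(x, y), ...]."""
    return sum(1 for p, q in zip(track, track[1:]) if crossing_direction(p, q, a, b) == 1)
```

An alert would then fire on a +1 event for a person track that is also thermally confirmed. A person walking out of the zone (-1) or lingering on the line (0) would not fire.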

Checklist

- [ ] Verified JetPack (L4T) version and TensorRT presence with cat /etc/nv_tegra_release and dpkg -l.

- [ ] Set performance mode: sudo nvpmodel -m 0; sudo jetson_clocks; verified with tegrastats under load.

- [ ] CSI camera (Arducam IMX477) streaming via GStreamer nvarguscamerasrc at 1280×720@30.

- [ ] I2C bus shows MLX90640 (0x33) and BME680 (0x76/0x77) with i2cdetect.

- [ ] Built YOLOv5s FP16 TensorRT engine with trtexec; confirmed inference latency ≤15 ms.

- [ ] main.py runs, logs runs/log.csv, prints alerts when person enters perimeter with thermal confirmation.

- [ ] Achieved ≥20 FPS end-to-end and stable GPU utilization; minimal dropped frames.

- [ ] Restored clocks and power mode after tests: sudo jetson_clocks --restore; sudo nvpmodel -m followed by your original mode index.

By following this case, you deploy a fused RGB-thermal perimeter intrusion detector on the NVIDIA Jetson Orin Nano 8GB using the Arducam IMX477, MLX90640, and BME680, with TensorRT-accelerated YOLOv5s, explicit power management, and quantitative validation for repeatability.
